AI in Chemical Engineering: Why Trust Matters as Much as Technology

Mo Zandi, Gihan Kuruppu and Brent Young argue that without cultural alignment, workforce engagement and ethical safeguards, AI adoption in process industries will falter

Quick read

- Trust is critical: AI adoption fails without workforce engagement and cultural alignment – fear and misunderstanding can cause active resistance

- Data quality underpins success: Incomplete, inconsistent, or manipulated data undermines AI models, making integrated, transparent data practices essential

- Ethics and governance matter: Regulatory gaps and ethical blind spots demand proactive frameworks, training, and accountability to ensure responsible AI use

WHEN a worker at a Sri Lankan glove factory deliberately cut the data cable of a newly delivered robot, it wasn’t technology that failed – it was trust. The incident revealed a truth that engineers and managers across process industries need to grasp: the greatest challenges in adopting artificial intelligence (AI) are not only technical, but also ethical and cultural. As chemical engineers, we are uniquely placed to ensure AI delivers value while safeguarding people, processes and society. But doing so demands an honest reckoning with data quality, workforce fears and governance gaps.

AI is transforming the manufacturing landscape. From process optimisation to predictive maintenance, the potential rewards are enormous: higher efficiency, lower costs and faster innovation. Yet the Sri Lankan glove factory incident illustrates that even the most advanced AI systems cannot succeed without human trust, organisational alignment and rigorous governance.

Bridging the ethics gap

Current research demonstrates a significant gap between AI development and the exploration of ethical implications, particularly in process manufacturing.

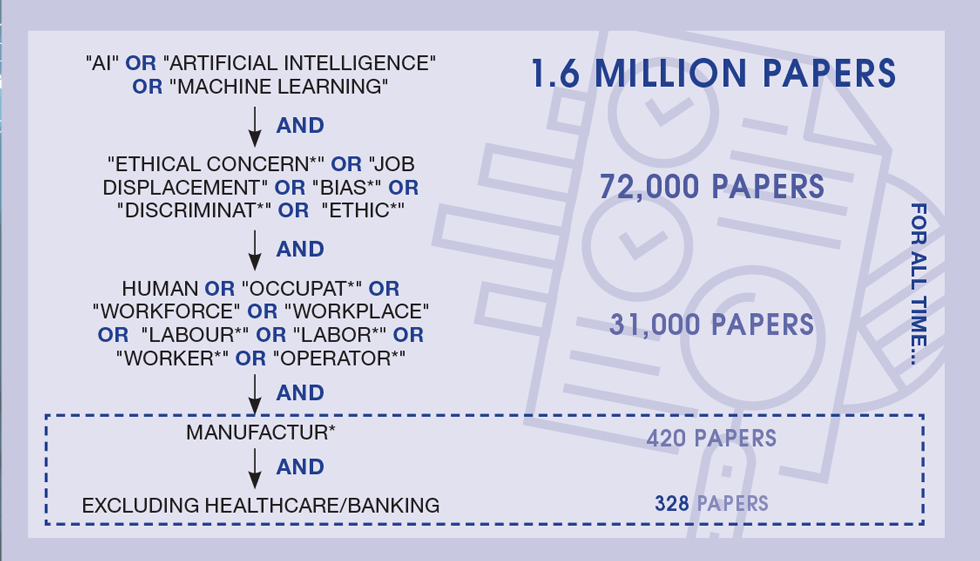

A literature review undertaken in June 2025 revealed striking disparities. While over 1.6m academic papers addressed AI broadly, fewer than 72,000 discussed associated ethical concerns. Narrowing the focus further to the implications for the human workforce in manufacturing yielded fewer than 400 papers (under 0.025% of the total), an alarming oversight in ethical considerations within process manufacturing specifically.

Recognising this gap, the University of Sheffield and the University of York in the UK and the University of Auckland in New Zealand launched a collaborative project. Funded by the Worldwide Universities Network (WUN), the team aimed to establish an ethical framework for the responsible integration of AI in manufacturing. Two international workshops, held in Sheffield in June 2024 and in Auckland in November 2024, gave experts and industry professionals a platform to deliberate these pressing issues.

Participants at the Auckland workshop included Chemical and Materials Engineering graduate students and academic staff specialising in process systems engineering, many actively involved in research and teaching using AI tools. In contrast, Sheffield’s workshop attracted researchers, academics, industry professionals and experts from fields including process systems, manufacturing and law, all actively engaged in AI development or use.

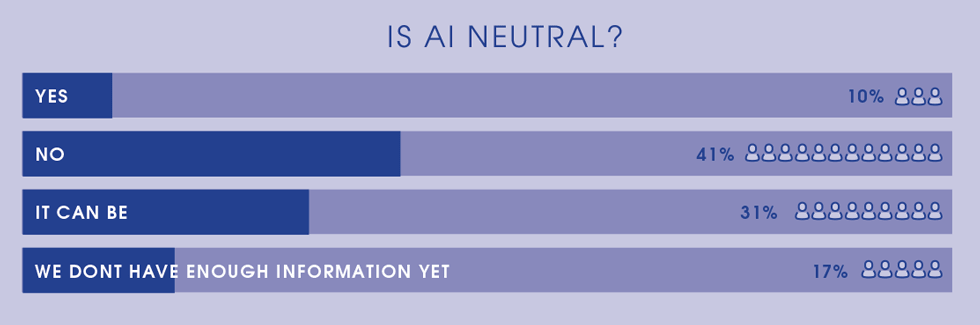

Results from these workshops were shared at ChemEngDay UK in Sheffield in April 2025, highlighting participants’ views on AI neutrality.1 Audience polling revealed that 41% believed AI was not neutral, while 31% indicated it could be neutral, prompting an insightful panel discussion. This split in opinion, together with the widespread use of AI by students and academics, underscores the need to integrate ethics training into educational curricula.

Is AI neutral?

Manufacturers adopting AI face three key challenges:

Data quality and availability

Reliable AI models depend on extensive, accurate datasets. However, legacy manufacturing processes, many of which have not yet reached Industry 3.0 levels of computerised automation, result in fragmented data collection systems. Without transitioning to automated, real-time data systems, AI in manufacturing will fail to deliver reliable outcomes because the foundation – accurate data – will not exist.

At the glove factory, manual data collection proved inconsistent and often manipulated. To meet production targets, workers near the end of the third shift manually recorded inflated numbers using tally marks. Human factors, such as fatigue or stress, also led to incorrect data entries, rendering manual records unreliable for AI model training. Additionally, when tasked with minimising leftover rubber latex, manual records of actual versus expected latex usage were frequently falsified, distorting decision-making processes. The experience shows that without accurate, trustworthy data, even the most advanced AI systems will falter, underlining the need for integrated and transparent data practices.
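To make the data-quality point concrete, the sketch below shows one simple way a plant might screen manual records before using them to train a model: compare each shift’s hand-recorded figures with an independent automated reading and flag large discrepancies. The record fields, the `flag_suspect_records` function, the assumed line sensor and latex flow meter, and the 5% tolerance are all hypothetical illustrations, not the method used at the glove factory, and the numbers are made up.

```python
from dataclasses import dataclass


# Hypothetical per-shift record pairing hand-written entries with
# independent automated readings (assumed line counter and latex flow meter).
@dataclass
class ShiftRecord:
    shift_id: str
    manual_count: int         # gloves recorded by hand with tally marks
    automated_count: int      # gloves counted by an assumed line sensor
    latex_recorded_kg: float  # latex usage written in the manual log
    latex_metered_kg: float   # latex usage from an assumed flow meter


def relative_gap(recorded: float, reference: float) -> float:
    """Fractional difference between a recorded value and its reference."""
    if reference == 0:
        return 0.0 if recorded == 0 else float("inf")
    return abs(recorded - reference) / reference


def flag_suspect_records(records, tolerance=0.05):
    """Return (shift_id, reasons) for every shift whose manual entries differ
    from the automated readings by more than `tolerance` (assumed 5%)."""
    flagged = []
    for r in records:
        reasons = []
        if relative_gap(r.manual_count, r.automated_count) > tolerance:
            reasons.append("output count mismatch")
        if relative_gap(r.latex_recorded_kg, r.latex_metered_kg) > tolerance:
            reasons.append("latex usage mismatch")
        if reasons:
            flagged.append((r.shift_id, reasons))
    return flagged


# Illustrative (made-up) records: shift "S3" shows inflated output and
# under-reported latex use relative to the automated readings.
records = [
    ShiftRecord("S1", 10_000, 9_950, 121.0, 120.0),
    ShiftRecord("S3", 11_000, 9_800, 118.0, 131.0),
]
print(flag_suspect_records(records))
# → [('S3', ['output count mismatch', 'latex usage mismatch'])]
```

Screening of this kind only works if an independent reference exists, which is why the move to automated, real-time data collection matters: comparing a falsified manual log with an equally manual “expected” figure would reveal nothing.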

Workforce understanding and cultural challenges

The glove factory incident also underscores the importance of workforce engagement. Fear, misunderstanding and mistrust of AI can manifest as active resistance. While management often views AI and robotics as exciting drivers of efficiency, frontline workers may see them as threats to job security. In Sri Lanka, that tension spilled over when a worker, anxious about losing his job, cut the data cable of a newly delivered robot.

Bridging this divide required more than technical fixes. A participatory “Robotics Team” was formed, involving operators, supervisors and engineers, who were trained in robot installation, operation and troubleshooting. This hands-on approach transformed apprehension into ownership, showing that automation could improve rather than replace their roles. Physically demanding work such as manual dipping and heavy lifting was eliminated, along with the associated risk of repetitive strain, improving safety and working conditions. At the same time, more consistent product quality created opportunities for new product lines and additional jobs.

Crucially, automation did not lead to redundancies. In a context of skilled labour shortages, automating parts of the plant allowed experienced workers to be redeployed to critical operations where their expertise was invaluable. In fact, their tacit knowledge of workarounds informed system design, ensuring automation solved real-world process issues rather than idealised scenarios. For example, robotic precision eliminated the need for manual vibration after glove dipping, a step previously introduced to correct human variability.

The lesson is clear: AI and automation cannot be treated as independent systems replacing human roles. Successful transformation depends on actively involving, empowering and learning from those closest to the processes. It requires cultural adaptation, trust and collaboration as much as it does technical innovation.

Regulatory and training gaps

The current regulatory landscape governing the use of AI in manufacturing remains underdeveloped, lagging significantly behind technological advances and leaving manufacturers uncertain about compliance standards, ethical obligations and accountability mechanisms. Similarly, training programmes and educational resources for workers are often inadequate, contributing to a widespread lack of understanding and mistrust of AI systems among manufacturing personnel.

This regulatory inadequacy is, admittedly, not limited to manufacturing – similar gaps appear across industries such as healthcare, finance and autonomous systems. However, the absence of robust regulatory precedents in other sectors does not diminish the importance of addressing these gaps in manufacturing; instead, it exacerbates the challenge, as manufacturers have fewer best practices to emulate. Companies must often navigate this terrain independently, emphasising self-governance and internal accountability in the absence of external guidance.

To overcome these challenges, organisations should proactively establish internal standards for ethical and safe AI use, drawing on pioneering efforts already in place. For example, Rolls-Royce introduced the Aletheia Framework,2 designed explicitly to promote transparency, accountability and ethics in AI applications. Initiatives like this offer practical blueprints for internal governance and ethical principles in AI. Manufacturers can adopt similar internal guidelines to bridge regulatory gaps, foster a responsible AI culture and align AI initiatives with organisational values, societal expectations and emerging regulatory standards.

Five practical strategies for ethical AI adoption

Building on lessons from the glove factory and international workshops, the following strategies provide actionable guidance:

1. Know your problem

Define the specific issue AI is intended to solve to avoid unintended consequences

2. Know your data

Understand your data’s origin, diversity and limitations; biased inputs produce biased outcomes

3. Perform ethical and safety assessments

Regularly evaluate AI systems for ethical and operational risks, ensuring transparency and accountability

4. Trial in low-risk environments

Pilot AI solutions in controlled, low-stakes scenarios to identify and rectify issues before broader implementation

5. Treat AI like an intern

Maintain human oversight, provide continuous feedback and supervise AI decisions closely
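Strategies 4 and 5 can be combined in software as a simple supervision gate, sketched below under stated assumptions. Small, high-confidence changes proposed by a model are applied only within a pre-agreed trial band and logged for later review; anything larger or less certain is held for a human to approve, and human feedback is recorded alongside the decisions, much as one would supervise an intern. The `Recommendation` and `SupervisionGate` classes, the tag name and both thresholds are hypothetical and were not part of the workshops’ guidance.

```python
from dataclasses import dataclass, field


# Hypothetical AI recommendation for a single process setpoint.
@dataclass
class Recommendation:
    variable: str      # illustrative tag name, e.g. "oven_temperature_C"
    current: float
    proposed: float
    confidence: float  # model's self-reported confidence, 0 to 1


@dataclass
class SupervisionGate:
    """Routes AI recommendations: small, confident changes may be applied
    within an agreed trial band; everything else waits for a human."""
    max_step: float = 2.0        # assumed largest change allowed without sign-off
    min_confidence: float = 0.9  # assumed minimum model confidence
    log: list = field(default_factory=list)

    def route(self, rec: Recommendation) -> str:
        step = abs(rec.proposed - rec.current)
        if step <= self.max_step and rec.confidence >= self.min_confidence:
            decision = "apply (logged for review)"
        else:
            decision = "hold for human approval"
        # Every decision is logged so supervisors can audit it later.
        self.log.append(("decision", rec.variable, rec.current, rec.proposed, decision))
        return decision

    def record_feedback(self, variable: str, accepted: bool, note: str = "") -> None:
        # Human feedback is stored alongside the decisions it refers to.
        self.log.append(("feedback", variable, accepted, note))


gate = SupervisionGate()
print(gate.route(Recommendation("oven_temperature_C", 100.0, 101.5, 0.95)))
print(gate.route(Recommendation("oven_temperature_C", 100.0, 108.0, 0.97)))
gate.record_feedback("oven_temperature_C", accepted=False, note="exceeds validated range")
```

The first recommendation falls inside the assumed trial band and is applied with a log entry; the second is a larger step and is held for a person to decide, however confident the model claims to be.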

Embedding ethics and skills for the future

Ethical AI awareness must begin in education. By equipping future chemical engineers to anticipate risks and integrate safeguards, we ensure that AI adoption strengthens processes, protects people and serves society. Embedding ethics into curricula will prepare graduates not just to deploy AI, but to do so responsibly – driving sustainable transformation instead of short-term gains.

Driving cultural and operational change

For industry leaders, this means setting clear internal standards, safeguarding data integrity and involving the workforce from design to deployment. For chemical engineers, it demands applying the same rigour used in process safety and systems optimisation: defining problems precisely, challenging assumptions and treating ethics as a design constraint, not an afterthought.

The Sri Lankan glove factory reminds us that technology alone cannot guarantee success. Trust, culture and engagement are just as vital. By combining technical excellence with ethical responsibility, chemical engineers can ensure that AI becomes a force for value, resilience and confidence – not division.

Mo Zandi is a professor of chemical engineering in the School of Chemical, Materials and Biological Engineering at the University of Sheffield. Brent Young is a professor in the Department of Chemical and Materials Engineering at the University of Auckland, where Gihan Kuruppu is a PhD candidate.

References

1. IChemE EdSIG Newsletter: bit.ly/edsig-newsletter

2. www.rolls-royce.com/innovation/the-aletheia-framework.aspx
