History of Nuclear Engineering Part 3: Atoms for Peace

In 1953, Dwight D Eisenhower (1890–1969) began his US presidential term with a widely applauded “Atoms for Peace” address. Martin Pitt reflects on how that worked out.

IN 1946, the US announced it wouldn’t be sharing nuclear information, even with its closest allies, forcing Britain to go it alone. So, Christopher Hinton (1901–1983), chief engineer of chemical company Brunner Mond (ICI), was appointed deputy controller of production, atomic energy. Britain’s first nuclear engineer, he was responsible for uranium processing and nuclear power stations, and thus, indirectly, bomb material. Two other deputy controllers were employed for research and weapons design, all under controller of production Lord Portal, of whom Hinton said: “I cannot remember that he ever did anything that helped us.”

In 1949, thanks to information passed on by its spies, the USSR exploded its first bomb, five years ahead of US expectations. On 3 October 1952, Britain staged its first nuclear test – in the Montebello Islands off the west coast of Australia – and the RAF had its first atom bombs in 1953. The USSR was kept well informed of British progress by a clutch of spies.

Apart from bomb material, nuclear reactors produced energy. In 1954, US Atomic Energy Commission chairman Lewis Strauss (1896–1974) said: “It is not too much to expect that our children will enjoy in their homes electrical energy too cheap to meter.”

In 1956, the UK’s Calder Hall reactor at Sellafield was the first in the world to deliver power in commercial quantities – 50 MW from the first of four reactors. It converted non-fissile U-238 into fissile Pu-239, which could be used to give more power. However, its main purpose, in common with similar power stations across the world, was to deliver weapons-grade plutonium, despite publicity to the contrary.

Uranium becomes the new oil

The focus on weapons provided the money, but chemical engineers grew expertise in nuclear power, with a host of new challenges and technologies. In addition, reactors provided a range of radioactive isotopes with different properties, which have made radiotherapy far more effective and precise than the early radium treatments. I have personally benefited from having the short-lived isotope iodine-125 implanted in a tumour. In nucleonic instruments, radioactive sources now provide reliable non-invasive measurements of level, density, and thickness on many industrial plants.

In 1955, at a European Conference on The Functions and Education of the Chemical Engineer in Europe, Hinton said: “Nuclear engineering is, after all, only a branch of chemical engineering.” The same year, he published a description of uranium production in the Transactions of the IChemE, including control of radioactive hazards.1

In 1957, American chemist Manson Benedict (1907–2006) and chemical engineer Thomas H Pigford (1922–2010) published the first textbook on the subject, Nuclear Chemical Engineering. Benedict had worked for MW Kellogg on the gaseous diffusion separation of uranium isotopes and became the first professor of nuclear engineering at MIT. Pigford established a department of nuclear engineering at Berkeley, including a 1 MW reactor for teaching and research. The 1981 edition of Nuclear Chemical Engineering covered the whole fuel cycle from mining uranium through designing the reactor, separating products and waste disposal.

Rickover the dope

Following a meritorious performance in the Second World War, Captain Hyman G Rickover (1900–1986) was appointed leader of a group of US navy engineers sent to Oak Ridge to learn about nuclear reactors, who were referred to by the physicists as Doctors of Pile Energy, or dopes. What Rickover wanted was the simplest and most reliable power source. He achieved this in 1954 with the Westinghouse LSR (light ship reactor) – now called the PWR (pressurised water reactor) – in the first nuclear submarine, the USS Nautilus. In a parallel project he oversaw a General Electric sodium-cooled reactor, a type more commonly known as a liquid metal reactor (LMR), for the second nuclear submarine, USS Seawolf, launched in 1957. Electromagnetic pumps made it virtually silent. The same year, to the delight of Eisenhower, Rickover opened the first commercial nuclear electric plant in the US, a 60 MW PWR built for a cancelled aircraft carrier. The PWR is the most widely used reactor today, on land and at sea, and the Seawolf was later converted to it. Rickover (pictured, inspecting the USS Nautilus) is now known as the father of the nuclear navy.

The USSR built a fleet of LMR attack submarines cooled by lead-bismuth, which had the disadvantage that the metal had to be kept heated when the reactor was out of service so that it stayed liquid. In 1980, the sodium-cooled 600 MW BN-600 started up in Beloyarsk, Russia, and is still providing electricity to the grid. France, Germany, Japan, and the US have had many experimental and a few production LMRs.


Heavy water

Heavy water has a significant portion of hydrogen replaced by deuterium or tritium, typically 20 to 95%. It was first prepared in 1933 by American physical chemist Gilbert Newton Lewis (1875–1946) by electrolysis. As hydrogen gas is removed, the heavier isotopes accumulate in the residue. It was later considered as a moderator and coolant in a reactor.

The same year, Norwegian chemical engineer Jomar Brun (1904–1993) proposed to his employer Norsk Hydro that they should build a commercial plant using the abundant hydroelectric power at Rjukan. It started in 1935 and was the only source in Europe, producing 11 l per month for researchers. The hydrogen produced went into Norway’s first ammonia plant. In 1939, with the plant receiving increasing orders from German chemical company IG Farben, the entire world stockpile (200 kg) was spirited away to France, where Russian-born French citizen Lew Kowarski (1907–1979) was working on the possibility of a nuclear reactor. Commonly described as a physicist, he insisted on the title of chemical engineer, having a degree in the subject from the University of Lyon. Both the heavy water and Kowarski moved to England in June 1940 as part of Operation Aerial, after which Kowarski joined the Tube Alloys project (see TCE 994). Brun later escaped to the UK and, fearing that the expanded heavy water plant was wanted for nuclear reactors and thus weapons, assisted in planning the commando raids which destroyed it.

Another part of the project was the Chalk River Nuclear Laboratory, 100 km from Montreal in Canada, where Kowarski eventually relocated. There, in 1944, construction began on ZEEP (Zero Energy Experimental Pile), the first nuclear reactor outside the US, which went critical in September 1945. ZEEP used heavy water from the Manhattan Project but did not contribute to the bombs. The work of Kowarski and his scientific colleagues was so advanced that, had it not been for the war, France would probably have had a reactor in 1941. In 1947, Kowarski commissioned the much larger research reactor NRX (up to 54 MW), which operated until 1993 and was the basis for the successful CANDU (CANada Deuterium Uranium) commercial reactors. Most other similar reactors use light (ie ordinary) water, but heavy water has greater neutron efficiency because it absorbs far fewer neutrons, meaning a reactor can run on natural uranium instead of needing it enriched with U-235. It can also use the spent fuel (ie low in U-235) from other reactors. Kowarski returned to France to build the country’s first two reactors in 1948 and 1952.
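The electrolytic concentration that Lewis used, and that Norsk Hydro ran at industrial scale, can be illustrated with the Rayleigh equation for a batch process. This is a minimal sketch under stated assumptions, not a reconstruction of either historical process: it treats deuterium as dilute, takes an assumed separation factor `alpha` of 6 (hydrogen leaving in the gas `alpha` times more readily than deuterium, relative to their concentrations in the water) and an approximate natural content of 150 ppm deuterium. The function names are hypothetical.

```python
# Approximate natural deuterium content, ppm of hydrogen atoms (assumed round figure).
NATURAL_D_PPM = 150.0


def enrichment_factor(remaining_fraction: float, alpha: float) -> float:
    """Return R/R0, the factor by which the D/H ratio of the residual water rises.

    Rayleigh equation in the dilute limit: R/R0 = f ** (1/alpha - 1), where f is
    the fraction of the original water not yet electrolysed and alpha (> 1) is the
    assumed separation factor, (H/D) in the gas divided by (H/D) in the liquid.
    """
    if not 0.0 < remaining_fraction <= 1.0:
        raise ValueError("remaining_fraction must be in (0, 1]")
    if alpha <= 1.0:
        raise ValueError("alpha must exceed 1 for deuterium to concentrate")
    return remaining_fraction ** (1.0 / alpha - 1.0)


def fraction_remaining_for(target_factor: float, alpha: float) -> float:
    """Invert the Rayleigh equation: fraction of water left when R/R0 reaches target_factor."""
    if target_factor < 1.0:
        raise ValueError("target_factor must be >= 1")
    if alpha <= 1.0:
        raise ValueError("alpha must exceed 1 for deuterium to concentrate")
    return target_factor ** (1.0 / (1.0 / alpha - 1.0))


if __name__ == "__main__":
    alpha = 6.0  # assumed, illustrative separation factor
    for factor in (10.0, 64.0):
        f = fraction_remaining_for(factor, alpha)
        print(f"x{factor:g} enrichment (~{NATURAL_D_PPM * factor:g} ppm D): "
              f"keep {f:.2%} of the water, electrolyse {1 - f:.2%}")
```

With these assumed values, a tenfold enrichment means electrolysing about 94% of the water, and reaching roughly 1% deuterium leaves well under 1% of the original volume, which suggests why cheap hydroelectric power mattered. A real plant would use several stages rather than one batch, so treat this only as an order-of-magnitude guide.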

The Superbomb

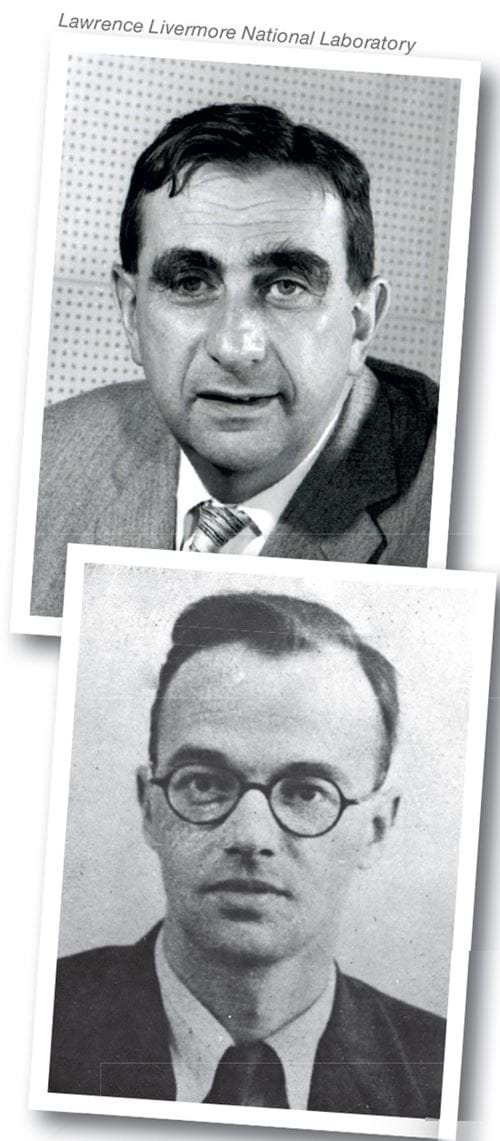

Hungarian chemical engineering graduate and PhD physicist Edward Teller (1908–2003, pictured top in 1958) was a Jew who fled the Nazis for the US. To him, heavy water meant something else – the fuel for a superbomb, in which deuterium atoms would give far more energy by fusion. He tried but failed to persuade the Manhattan Project to go straight for this. He was reluctant to do the calculations assigned to him, instead working on his pet idea, and was finally allowed to work on it alone for the last year of the project. The work he did not do was mainly carried out by physicist Klaus Fuchs (1911–1988, pictured bottom in 1951), a German communist who had fled the Nazis for England and joined the project as part of the British team while spying for the USSR.

A conference in 1946 decided the Superbomb was probably possible, but uncertain. Many hoped it would be impossible.

In 1949, when the USSR exploded its own plutonium bomb, the US realised it had lost its lead. Teller said: “There are only two alternatives: either we make the H-bomb, or we wait until the Russians drop it on us.” In 1952, an explosion 450 times as powerful as the Nagasaki bomb showed that the concept worked, though it was not a practical weapon, having a mass of 73 tonnes, including 20 tonnes of refrigeration plant to keep the deuterium liquid. A change to lithium deuteride produced a transportable bomb in 1954. The UK, independent again, produced its own in 1957, and France and China later joined the new nuclear club.

During Eisenhower’s presidency (1953–1961) the number of US nuclear weapons rose from 1,000 to 20,000.

Fusion – the future

Uranium hadn’t given us free electricity, but fusion offered new hope. If the power of the H-bomb could be released more slowly, then deuterium from the sea would be a limitless source. In 1947, British physics Nobel prize-winner George P Thomson (1892–1975) proposed to the Atomic Energy Research Establishment (AERE) at Harwell that a pinched plasma be used to contain the reaction. Fuchs, then head of theoretical physics at Harwell, dismissed and delayed the ideas, but doubtless passed them on to his Soviet handlers.

Eventually Harwell announced success in 1957 with a device using this principle, called ZETA (Zero Energy Thermonuclear Assembly). Nuclear fusion had apparently been achieved, as shown by the generation of neutrons within a machine. Practical power generation might be achieved in ten to 20 years. I well remember the excitement of the newspapers. Free electricity was promised again. Shares in uranium companies dropped like a stone.

Eventually it was admitted that a mistake had been made, and fusion had not been achieved. Today, nuclear fusion reactors are still ten to 20 years away, and the 400-plus nuclear power plants in the world are all fission.

Thorium

Thorium was the only other naturally radioactive element known before the Curies discovered polonium and radium. It was identified in 1828 by outstanding Swedish chemist Jöns Jacob Berzelius (1779–1848),2 who named it after Thor, the Norse god of thunder, and prepared the pure metal in 1829. He noted that the heated oxide gave off an intense white light. This property was used by Austrian inventor Carl Auer von Welsbach (1858–1929), who in 1889 patented the gas mantle using a synergistic mixture of 99% thorium oxide and 1% cerium oxide, which revolutionised gas lighting. Welsbach also invented the sparking lighter flint and the metal filament electric light bulb, with a clever way of making osmium wire. Gas mantles are still used for camping and in rural areas. Mantles containing thorium are forbidden or restricted in many countries but are still in extensive use in others.

The Manhattan Project considered thorium but focused on uranium for speed. During the Nazi occupation of France, supplies of thorium oxide for mantles were seized, leading Allied intelligence to conclude they were to be used to make a nuclear bomb. In fact, the plan was to use it after the war to manufacture radioactive toothpaste supposed to kill bacteria and make teeth shine whiter.

Very little happened with thorium for three reasons. 1: Uranium turned out to be more abundant than first thought. 2: There was still a focus on bombs. 3: Rickover. His prestige and forceful personality saw him rise to four-star admiral and dominate US nuclear power for over 30 years. He was not interested in other nuclear fuel cycles, only development of proven technology.

Experimental thorium reactors operated in the 1960s but were largely abandoned until 2002. Interest revived partly because of the reduced demand for plutonium for weapons, but also because of the problem of waste treatment and storage. India has the largest thorium stocks in the world but very little uranium, so is particularly interested. Thorium has been a problem as a waste product from rare metal extraction, since it must be disposed of as radioactive waste. It has different chemical and radiochemical properties from uranium, so should be an interesting area of chemical engineering development over the next decades.


Natural thorium is mostly Th-232, which is not fissile like U-235 but is fertile (can give more fuel) like U-238, so it requires a more active source such as U-235 or Pu-239 to initiate the chain reaction. It absorbs neutrons to make Th-233, which decays via Pa-233 to U-233, the fuel. Thorium is much more abundant than uranium and does not give rise to so much long-term waste. Experimental piles using water or gas cooling were built, and by 1959 there was the Molten Salt Reactor (MSR). This eliminates the risk of meltdown because the fuel is already dissolved in a molten mixture of fluoride or chloride salts. Fuel maintenance or replacement can be done by pumping rather than remote handling. Thorium can also be used in a CANDU reactor.
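The Th-233 → Pa-233 → U-233 step is a two-stage radioactive decay chain, whose populations follow the Bateman equations. The sketch below assumes round half-lives of about 22 minutes for Th-233 and 27 days for Pa-233, treats U-233 as stable over these timescales, and ignores any further neutron capture, so it is an illustration rather than a fuel-cycle model; `chain_fractions` is a hypothetical helper name.

```python
import math

# Approximate half-lives, in days (assumed round values for illustration).
TH233_HALF_LIFE_DAYS = 21.8 / (60 * 24)  # about 22 minutes
PA233_HALF_LIFE_DAYS = 27.0              # about 27 days


def chain_fractions(t_days: float) -> tuple[float, float, float]:
    """Fractions of an initial batch of Th-233 present as Th-233, Pa-233 and U-233.

    Two-step Bateman solution; U-233 is treated as stable and further neutron
    capture during irradiation is ignored.
    """
    l1 = math.log(2) / TH233_HALF_LIFE_DAYS  # Th-233 decay constant, per day
    l2 = math.log(2) / PA233_HALF_LIFE_DAYS  # Pa-233 decay constant, per day
    th = math.exp(-l1 * t_days)
    pa = l1 / (l2 - l1) * (math.exp(-l1 * t_days) - math.exp(-l2 * t_days))
    u = 1.0 - th - pa  # whatever has left the chain has become U-233
    return th, pa, u


if __name__ == "__main__":
    for t in (0.0, 1.0, 27.0, 270.0):
        th, pa, u = chain_fractions(t)
        print(f"day {t:>5g}: Th-233 {th:.3f}  Pa-233 {pa:.3f}  U-233 {u:.3f}")
```

The point it illustrates is that the first step is over within hours, while the Pa-233 stage sets the pace: about a month after irradiation, only around half of the bred material has become U-233.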

Waste not, want not

Under wartime conditions and then the nuclear arms race, the long-term environmental implications were scarcely considered. Early sites are generally highly contaminated, and remediation is technically difficult and expensive. In addition, solid waste was generally just accumulated on or near the site, with gases and liquids released to the environment. In 1976, the UK Flowers report recommended that further fission sites should not be approved until a satisfactory system of waste disposal had been provided. This is still work in progress, and the subject of much controversy. The issues are complex and will require a lot of chemical engineering skill and effort in the future.

In 1993, as part of arms reduction, a 20-year “Megatons to Megawatts” agreement began in which Russia would supply the US with enriched uranium from 20,000 decommissioned weapons, diluted to reactor grade, at a price below that of US companies (which ceased production).

This was completed in 2013, but the US continues to buy 25% of its nuclear fuel from Russia, with these imports exempted from embargo despite the Russia-Ukraine war. On 14 December 2023, a bill was passed to re-establish US uranium enrichment for the sake of nuclear electricity and navy propulsion. In January 2024, the UK government announced £300m (US$377m) for development of UK uranium enrichment.

References

1. C Hinton, “Some aspects of the chemical processes ancillary to atomic energy: the manufacture of uranium metal from ore”, Transactions of the IChemE, 1955.

2. https://www.thechemicalengineer.com/features/signs-and-symbols/

Martin Pitt CEng FIChemE is a regular contributor. Read other articles in his history series: https://www.thechemicalengineer.com/tags/chemicalengineering-history
