Better Safe Than Sorry (Whatever Safe Is)

Trevor J Hughes on why reducing risk will only come from engineers challenging cringeworthy ‘management speak’ and improving public understanding of consequence

To be honest, I was never interested in safety. That sounds like a terrible admission for a chemical engineer who wanted to become a production manager. My ambitions were met. Safety became just one of the umpteen demands on my managerial time.

In mid-career, following the closure of the manufacturing site I had been managing, I had to change the course of my career. I found myself in management consultancy – specialising in safety! Some 20 years later, safety has become my passion, leaving me wanting to do whatever I can to help the industry improve. Six years ago, I set about doing some serious research into what works and what doesn’t in the field of safety. This research is documented in the book Catastrophic Incidents: Prevention and Failure.¹

Significant safety incidents

The most influential event under my leadership was a spill. In industry parlance we call it “an excessive deviation from permitted discharge levels of a hazardous and toxic chemical”. The incident happened overnight when the shift had to deal with an unusual process deviation relating to ice fouling of a heat exchanger.

Of course, political parties and the newspapers sensationalised it by reporting the plant as having “dumped” the material. Although there was no measurable environmental damage (even though the spill did reach an estuary), the reputational damage was huge. Instead of focusing my attention on managing the production and costs of the plant I had to focus on managing the fallout from the incident. A shortcut had been taken when dealing with a process anomaly, which resulted in the excessive discharge. I doubt that this was the first time, but it was the first time the discharge went on for so long. When I reflect on the incident, what strikes me most is that several years earlier, working on the same plant as a young development engineer, one of my tasks was yield improvement. Plant supervisors had told me about some abnormal events, such as thawing of the ice in the heat exchanger, and I should have paid more attention. I was focused on big-ticket yield improvements. I had little control over the details when I took a management position.

Some years later, a fatality occurred at a plant which I was subsequently assigned to manage. It does not make me feel better to tell you that the fatality occurred some three weeks before I took over. The due diligence carried out before acquiring the plant the previous year had left us blind to a critical risk. An unapproved time-saving measure had been taken to improve cycle time on a batch process, resulting in low flashpoint material being erroneously used. When I reflect on the incident, I also doubt that this was the first time this method had been adopted; previously, the fatal spark had fortunately not occurred.

Questionable statements on management policy

Let’s move on to what “management” has to say about safety. During my career in management consultancy, I had the opportunity to visit over 100 installations processing hazardous materials of various kinds. The following are statements I frequently came across, all of which are well-meaning but, when you think about it, are of little use in giving direction:

- In this company, we don’t do anything unless it is safe to do so

- Here, everyone has the right to “stop the job” if they observe anything unsafe

- It [safety] either starts at the top, or it doesn’t start at all

Politicians and government officials are prone to making such cringeworthy statements. Take the following, relating to the Covid pandemic:

- We will not ask the teachers to return to work until we are fully satisfied that it is safe for them to do so

These are somewhat useless statements because they assume an absolute standard of safety. It’s not surprising since the public talks about safety in such absolute terms. There is an unavoidable risk when anyone uses a vehicle, no matter how well the vehicle is driven. However, given the benefit of transport, most of us are willing to take that risk.

What is safe? A consequence-benefit analysis

So, in practice, decisions on “What is Safe?” balance the benefit of taking the risk and our perceptions of the risk. I refer to this as a consequence-benefit analysis.

Perceptions of risk are based on perceived consequences (death, life-changing injuries, chronic illnesses such as cancer, and less severe injuries which might result from slips, trips, or falls) and on perceived frequency (frequent, seldom, never heard of it here, never heard of it anywhere, etc).

I remember being so excited when I read Reducing Risks, Protecting People, produced by the UK Health and Safety Executive (HSE) in 2001² as a guide to the HSE’s decision-making process. For the first time, here was a sensible approach to recognising and distinguishing between tolerable and intolerable risk.

Set aside for a moment “slips, trips, and falls” risks, which can be more readily managed, and consider less visible, insidious risks, such as the spill and the fire-related fatality I mentioned earlier from my experience. I contend that production managers are rarely confronted with acting on such risks because they perceive them as:

- very low probability, especially given the typical tenure of a production manager at the same plant (maybe 3–5 years)

- low level of control – the plant is already designed and in production, the production manager “inherits” the risks from his predecessors

- low level of risk ownership – the plant design is “owned” by the corporate body, and the production manager is charged to run it, not to criticise it
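The first point in the list above can be illustrated with some simple arithmetic. The sketch below is only an illustration under stated assumptions: the 1-in-100-year event frequency and the 40-year plant life are hypothetical figures chosen for the example, not data from any real plant, and the calculation assumes each year carries the same independent chance of the event.

```python
# Hypothetical illustration: the figures below are assumptions, not plant data.

def chance_during_period(annual_probability: float, years: int) -> float:
    """Chance of at least one event in `years`, assuming every year is
    independent and carries the same annual probability."""
    return 1.0 - (1.0 - annual_probability) ** years


ASSUMED_ANNUAL_PROBABILITY = 1 / 100  # assumed "1-in-100-year" event
ASSUMED_PLANT_LIFE_YEARS = 40         # assumed operating life of the plant

if __name__ == "__main__":
    periods = [
        ("3-year tenure", 3),
        ("5-year tenure", 5),
        ("plant life", ASSUMED_PLANT_LIFE_YEARS),
    ]
    for label, years in periods:
        chance = chance_during_period(ASSUMED_ANNUAL_PROBABILITY, years)
        print(f"{label:>14}: {chance:.1%}")
```

Under these assumed figures, a manager with a three to five year tenure faces roughly a 3% to 5% chance of seeing the event, while the plant itself faces about a one-in-three chance over its life. The risk that looks negligible from the manager's chair is far from negligible for the facility and its neighbours.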

Misperception of consequence

Take any major incident, such as Grenfell Tower, Deepwater Horizon, Fukushima Daiichi, etc. Without the benefit of hindsight, who would have had the courage and the credibility to alert management, corporations, and government of the potential consequences? One of the reasons that a response plan to an event such as Deepwater Horizon was not fully developed in advance appears to be that neither the companies nor the government/state authorities involved thought such an event could happen. Indeed, at Grenfell Tower and Fukushima Daiichi the vulnerabilities to a major accident had been raised, but apparently not acted upon, probably because of misperception of the risk.

In my work in safety consultancy, I often need to present high-consequence scenarios to sceptical audiences.

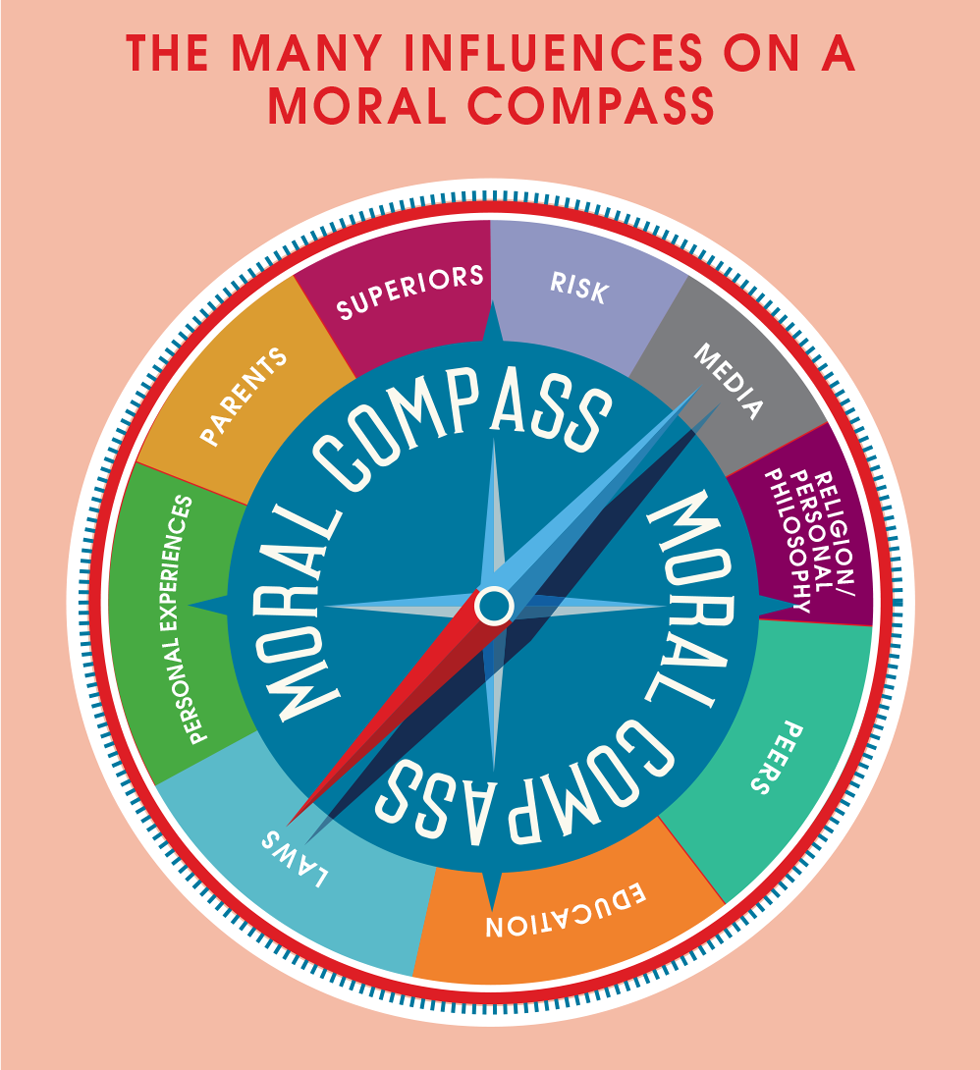

The moral compass

Many, perhaps most, commentators on safety in industry point the finger at management. Quite right, but is it helpful? In Dame Judith Hackitt’s article Did you Sleep Well? for this magazine,³ she quotes John Bresland (former chair of the US Chemical Safety Board). He says that “leadership often comes down to making a choice between making decisions that are expedient and what people want to hear versus making decisions that may be career-limiting in the short-term but which will enable you to live with yourself and sleep easy knowing you’ve done the right thing”.

It is a wise comment, but does management typically have a moral compass that influences them accordingly? I suggest that the majority do not. Unless we, as a society, do something to change this, no amount of moral preaching will make a significant difference.

So, do we give up? Absolutely not. There are ways to influence moral compass.

Consider your diligence in segregating waste for recycling now versus some years ago. This has been a moral compass change for us all.

Influencing the moral compass when it comes to safety

Let’s start with children – the formative age for moral determination.

A look at the UK national curriculum shows that the subject of risk is mainly buried in maths sections relating to probability. Where probability is taught, it is in the context of rolling dice or other games, and it is not clearly connected to risk.

Risk, probability, and consequence topics are appropriate for young people in the 11–18 age group. During this stage of their lives, they will be exposed to, or tempted by, many potential risks including drugs, sex, alcohol, smoking, vaping, and driving a vehicle. If old enough to try these activities, young people are indeed old enough to learn about risk. The objective is not to preach right from wrong, but to allow the young person to make their own informed consequence-benefit analysis.

Tertiary education

Some tertiary studies such as chemical engineering and mechanical engineering are likely to result in the student following a career in high-hazard industries. I was surprised to discover how little process safety or mechanical integrity is taught in undergraduate studies. Process safety is a subject of vast importance to a chemical engineer’s career, not just in production but also in research, design, and construction. I met with a few university staff from the chemical engineering profession, and they invited me to participate in some university seminars. This experience led me to agree that, while process safety may not be explicitly taught, it is indeed embedded within the design projects as discovery learning. I understand that the IChemE accreditation process puts considerable emphasis on process safety but does not explicitly require the subject to be taught. Embedding the learning within, say, the design project is satisfactory, but to me is not sufficient. Process safety does not lie within the typical line of interest and research of university staff, and this may be at the root of how little of the subject is explicitly taught.

Public disclosure of risk – safety case

Thinking back to the spill I mentioned earlier in relation to the plant I was managing, I realise just how deeply public perceptions can influence management. An adverse perception can seriously hinder many aspects of a manager’s job. Consider, for example, recruitment, planning applications, attention from regulatory bodies, and the time taken to manage, etc. A positive perception significantly improves such aspects. So, irrespective of the moral compass of a manager, public perceptions can move that compass in the right direction from a safety perspective.

The first proposal here concerns the safety case. Most high-hazard operations in the UK and in the European Union (EU) require the preparation of a safety case by law (originally the Control of Major Accident Hazards or COMAH regulations). In brief, a safety case comprises a description of the risks from the facility, the emergency provisions, and the measures being adopted to prevent a major accident. The document must be approved by the authorities. If the COMAH site significantly changes its process or how it is managed, then the safety case should be revised. It can be regarded as a series of promises the plant operator makes to achieve a licence to operate.

Such promises are not made public. I understand that safety cases were initially intended to be public documents, and I propose they should become so. This could greatly help align the operator’s moral compass. In the event of an incident, a key question could be where the operator’s promises had no longer been met. The argument which caused safety cases to become confidential was that they provided details that terrorists could exploit. This argument needs to be challenged. Terrorist attacks are usually well thought out. High-hazard facilities can be readily identified by sight, with descriptions of their activities on the internet and casual conversations with employees, contractors, and logistics personnel providing plenty of detail without the need to consult a safety case.

The second proposal also involves the safety case concept. I propose that the safety case expectation be adopted by operators of high-hazard facilities throughout the world. I have encountered organisations voluntarily adopting the safety case approach in other countries where law does not require it. It can significantly facilitate relations with the community, planning applications, and government. It would include a holistic set of promises.

Public disclosure of risk – annual reporting

All organisations have an annual reporting process and, for most, these annual reports are publicly available through the annual Report and Accounts. Originally purely financial, these reports have developed to include aspects of risk including off-balance sheet issues such as diversity, anti-corruption measures, and environmental protection. In many cases, these off-balance sheet issues are covered in a separate report, for example a sustainability report.

Several organisations, including the Global Reporting Initiative (GRI), work to help businesses communicate their impacts on off-balance sheet issues. These organisations use structured frameworks requiring declarations on various off-balance sheet issues. However, major accident hazards are not part of the current framework.

The declaration of risk is not currently required unless the risk has already been made evident by a catastrophic event. I propose a standard specifically on the subject of the declaration of major accident hazard risk, with companies expected to declare those risks. Should the public not expect such clarity? An example concerns the risk from tailings dams in mine processing operations. Following the Brumadinho dam disaster in 2019, mining companies are now starting to declare tailings dam risks, and this declaration will be part of the imminent GRI standard 14 on mining. However, this is only after the risk has been made visible by such a catastrophic event.

Actions you can take

Changing societal culture takes a lot of people pushing in the same direction and could involve:

- asking to see the safety case for your high-hazard site, or sites within your community. It may be withheld, but asking sends a message in itself

- reading the annual reports of companies you are employed by, or who operate within your community. Do they declare their risks? And if not, ask some questions about those risks. Local politicians are likely to be receptive to getting involved

- finding out who, within your company, prepares the annual report on issues relating to risk and sustainability. This might be the company secretary. Ask about major accident risks and how they are reported. The company secretary may welcome some help

- contacting your local university with courses on foundation subjects for the high-hazard industries, such as chemical engineering, mechanical engineering, chemistry, etc. Ask how much of their courses involves process safety and related issues such as asset integrity

- considering whether your company should preferentially recruit people who have studied at educational establishments strong in process safety

Stop asking “Is it safe?” as if this were a black-and-white decision. Safety is ultimately a consequence-benefit analysis. If we recognise and adopt this philosophy, safety will become stronger.

References

1. TJ Hughes, Catastrophic Incidents: Prevention and Failure, Taylor & Francis, 2023

2. Reducing risks, protecting people, Health and Safety Executive, 2001

3. www.thechemicalengineer.com/features/ethics-series-did-you-sleep-well/
