Students, educators, and generative AI in peaceful coexistence

Stuart Prescott addresses the strengths and weaknesses of generative AI in an education setting

As ChatGPT burst onto the scene in 2022, educators worldwide wondered what generative AI (GAI) tools would mean for learning and the assessment of learning. Almost a year on, we know much more about GAI and can identify where it enhances education and training, and where it forces us to rethink current practice.

While some institutions have sought to ban the use of GAI, our approach at the University of New South Wales (UNSW Sydney) has been much more nuanced. We recognise that GAI has value and that learning to use it is a skill that must be developed; a peaceful and productive coexistence is needed, where teachers and students understand the strengths and weaknesses, and can responsibly decide when GAI is appropriate.

Understanding the weaknesses

An important first step in comprehending the weaknesses of GAI, and in particular large language models (LLMs) like ChatGPT, is to understand that they are built around the statistics of word sequences: how likely it is that particular words appear together, and in what order.
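
As a rough illustration of what "statistics of word sequences" means, the sketch below counts which word follows which in a tiny made-up corpus and turns the counts into probabilities. This is a deliberately simplified toy (a bigram model): the corpus and the function name `next_word_probabilities` are invented for illustration, and real LLMs use neural networks trained on vastly more text rather than simple counts.

```python
from collections import Counter, defaultdict

# Hypothetical toy corpus, invented for this illustration.
corpus = "the pump feeds the reactor and the reactor feeds the column".split()

# Count how often each word is followed by each other word.
follow_counts = defaultdict(Counter)
for current, following in zip(corpus, corpus[1:]):
    follow_counts[current][following] += 1


def next_word_probabilities(word):
    """Return the observed probability of each word that followed `word`."""
    counts = follow_counts[word]
    total = sum(counts.values())
    return {w: c / total for w, c in counts.items()}


print(next_word_probabilities("the"))
print(next_word_probabilities("feeds"))
```

Nothing in these counts records whether a sentence is true; the model only knows which words tend to follow which.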

LLMs have a range of tuning parameters to control their output, one of which is the “temperature” of the model. As chemical engineers, we can lean on our understanding of thermodynamics and see that temperature is related to information entropy, with higher temperatures increasing the probability of the output containing unlikely combinations of words. When generating text, it is this entropy that gives the writing character, causes synonyms to be used, and means you don’t get the same output every time. When the LLM is acting as an ideation partner, it is these unlikely combinations that are the creative, outside-the-box, or unexpected ideas that stand out among the more predictable suggestions. However, when our desire is for strictly factual output, we still see these unlikely combinations in the output, but they are now perceived as incorrect statements.
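
The link between temperature and entropy can be sketched numerically. The example below assumes the common approach of dividing the model's raw word scores by the temperature before converting them to probabilities (a softmax); the candidate words and their scores are hypothetical values chosen for illustration, not output from any real model.

```python
import math


def softmax_with_temperature(scores, temperature):
    """Convert raw scores to probabilities after scaling by temperature."""
    scaled = [s / temperature for s in scores]
    peak = max(scaled)  # subtract the maximum for numerical stability
    weights = [math.exp(s - peak) for s in scaled]
    total = sum(weights)
    return [w / total for w in weights]


def shannon_entropy(probs):
    """Information entropy (in nats) of a probability distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)


# Hypothetical candidates for the next word after "The reactor is ..."
candidates = ["hot", "full", "offline", "purple"]
scores = [3.0, 2.0, 1.0, -1.0]  # assumed raw scores, for illustration only

for temperature in (0.2, 1.0, 2.0):
    probs = softmax_with_temperature(scores, temperature)
    summary = ", ".join(f"{w}={p:.3f}" for w, p in zip(candidates, probs))
    print(f"T={temperature}: {summary} | entropy={shannon_entropy(probs):.3f}")
```

Running this shows the probability of the unlikely word ("purple") and the entropy of the distribution both rising as the temperature increases, which is the behaviour described above.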

Hallucination

When the output from the LLM contains incorrect information, it’s tempting to say “the LLM is lying”. However, attributing human behaviours to LLMs is unhelpful. LLMs have no knowledge of right or wrong, fact or fiction, and so can’t act to intentionally mislead. The phenomenon is instead described as “hallucination”, a term that seriously downplays the problem. In late 2022, Meta (Facebook’s parent company) launched the Galactica LLM, which had been trained on a vast quantity of scientific output. Just three days later it was withdrawn because, instead of revolutionising the way we do research, it was generating pseudoscience and falsehoods. Perhaps the most dangerous aspect of hallucinated output is that it remains reasonably well written and very confident. There is no hint of modesty in the output and if you ask, “are you sure about that?” the answer is always affirmative.

Making up citations

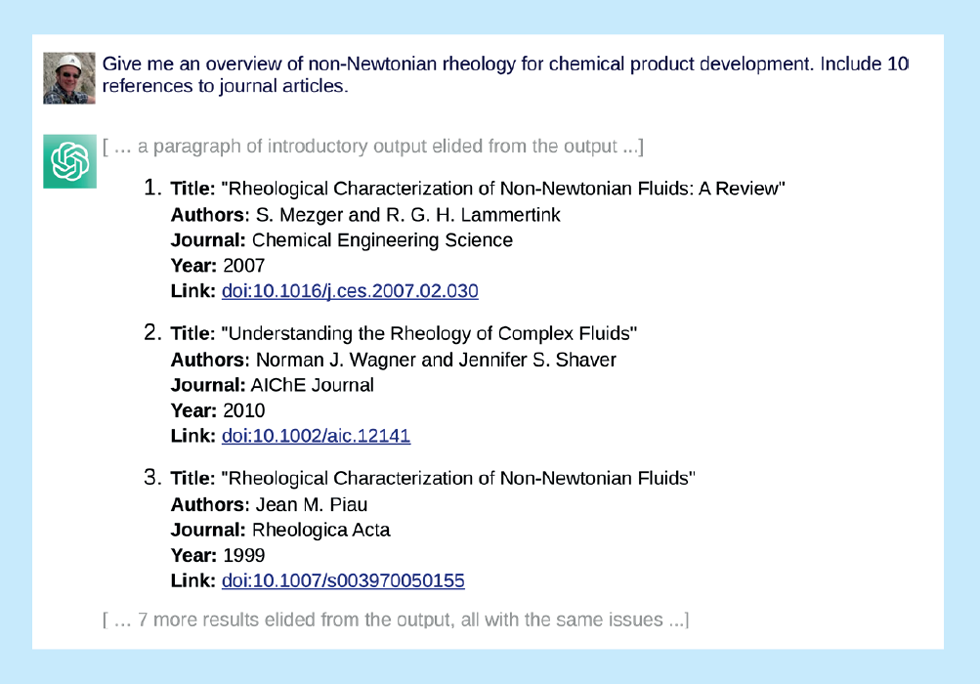

GAI output can include citations. However, they are often merely a collection of words emanating from the LLM’s statistics. Figure 2 shows that beyond superficial plausibility, where fields (title, authors, and journal) may individually seem reasonable, the citation does not exist.

Making up capabilities

Interactive tools like ChatGPT can help the user find ways of using them by making suggestions about their capabilities. ChatGPT suggested to us that it could critique a block flow diagram (BFD) and so we gave it the URL of one. Hundreds of words of critique were generated, including an introductory overview, a set of bullet points to work on, and a concluding sentence. The bullet points ranged from vague to oddly specific with some obviously unrelated to the BFD. Indeed, since we run the webserver for that URL, we know the LLM never downloaded the BFD and had concocted the entire output. What we were given was nothing more than text that conformed to our expectations based on the word statistics of that genre. Even when challenged, the LLM vehemently maintained that it had undertaken the review.

When you need style

As we saw with the BFD review, a strength of GAI is mimicking styles, even if the substance might be lacking.

What does it mean to give feedback – writing to a genre

In one of our master’s level courses, we found many students were unfamiliar with giving peer feedback on assignment tasks; providing direct instruction around content, length and genre has limited success. However, LLMs can critique the writing, offering suggestions about what is missing from it and what can be expanded. Importantly, the LLM has no knowledge of what the student is writing the feedback on; it can’t tell them whether the feedback is appropriate, but it can tell them whether its style matches our expectations and offer hints to improve.

Explain it to me

In our process modelling course, our students use Python to numerically solve sets of differential equations. Some students struggle with the programming aspect and get stuck understanding examples from class. The hosting service we use for the coding (CoCalc.com) added a helpful button to their user interface: Explain This Code. The button starts a conversation that asks an LLM to explain the code. The LLM is able to integrate information from the code, the comments, and the context within which the variables are used to provide quite good explanations.

Educating the educator

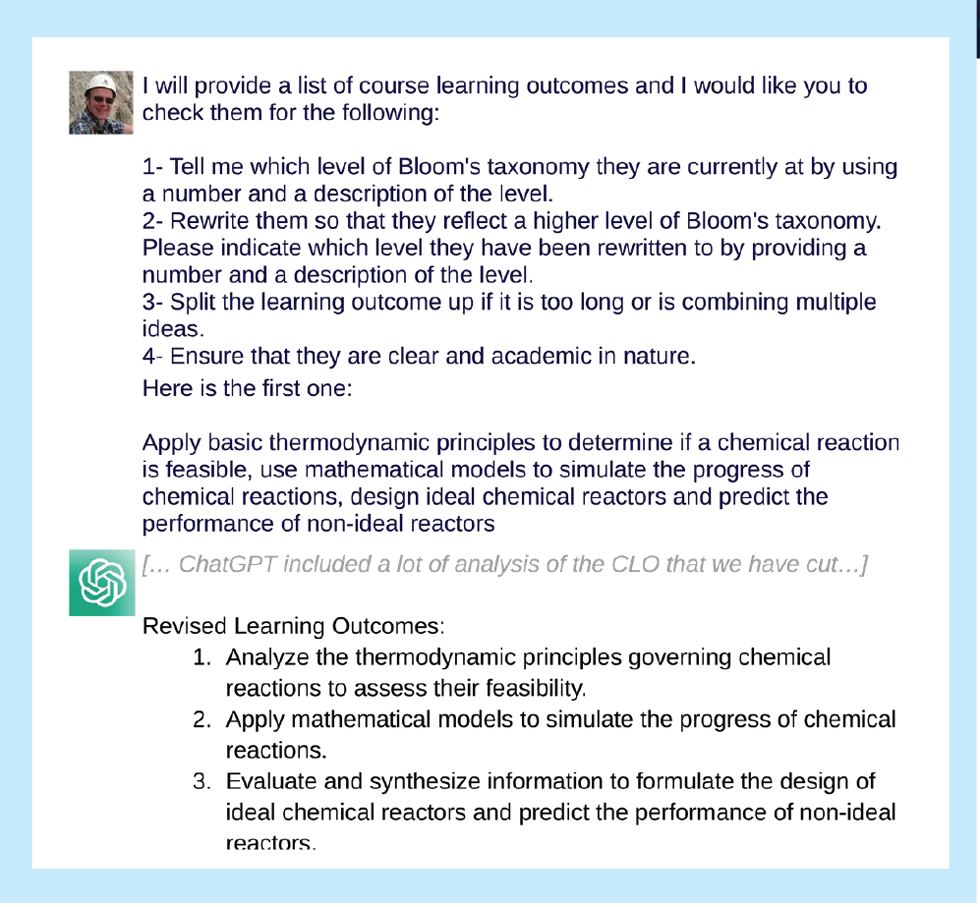

Every training course or university module has learning outcomes that explain its purpose. Writing to the genre can be daunting, with the usual sticking point being finding good verbs to describe the capabilities the students will develop. In working with academics across engineering disciplines, Giordana Orsini (an educational developer in UNSW Faculty of Engineering) developed a set of ChatGPT prompts to take draft learning outcomes, classify them using Bloom’s taxonomy, and offer suggestions for rewording. Figure 3 shows part of the much longer interaction with ChatGPT that takes an old and non-idiomatic learning outcome for a reaction engineering course, identifies that it can be improved by splitting into three, and suggests better verbs.

Responsible use

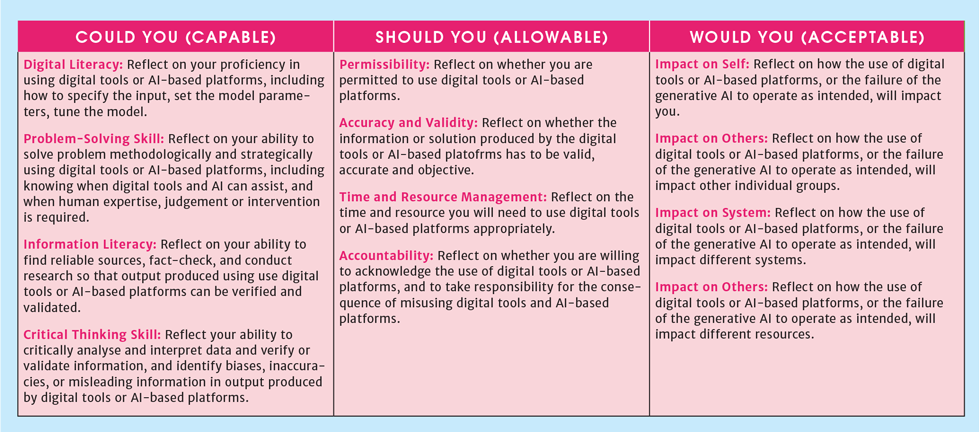

Conversations with our students about GAI have necessarily included academic integrity and the appropriateness of the technology, with details varying according to teaching contexts such as the stage of the degree and the objectives of the module. With our second-year chemical engineering and chemical product engineering students, Dr May Lim is asking students to engage with three sets of questions that address:

- Could you use GAI: do you have the skills to use it and to understand its output?

- Should you use GAI: is it permitted based on the nature of the tasks or accountability for the output?

- Would you use GAI: are the impacts on others and the perceptions of AI usage acceptable in this context?

Dr Lim’s “Could You, Should You, Would You” sequence (Figure 1) offers a techno-ethical framework for deciding on the use of GAI for coursework, and in future professional situations. This framework is also applicable to the use of GAI by instructors, particularly in the context of whether GAI-based grading and feedback mechanisms are accurate enough to be trusted and acceptable from the perspective of the students.

Conclusions

Understanding the limitations of every tool is an important part of education and provides the basis for techno-ethical frameworks to make professional decisions about appropriate usage. In encouraging our staff and students to learn how to play to the strengths of GAI, we can help them avoid the problems of hallucination and give them tools to boost productivity. For students, educators and graduates alike, being able to take responsibility for the output of the tool remains key and will be the differentiator between jobs that are vulnerable to GAI and those that are not.

References

1. A more technical discussion of LLMs: bit.ly/462RKVg

2. An exploration of failure modes of LLMs: bit.ly/3Ravi8w

3. Institutional perspective and case studies from UNSW: https://www.education.unsw.edu.au/teaching/educational-innovation/generative-artificial-intelligence-education-teaching

Generative AI to try…

If you want to experiment with AI (though adhere to your employer’s use policy if doing so on a work machine), there is a long list of programs available. Here is a selection by application:

Text-to-text: Type in a prompt and see what your AI of choice generates. Prompts can be as varied as: “what do you need to help me optimise a chemical process?”; “list known incidents involving hydrogen fluoride”; “how do I calculate the pressure drop of a carrier gas?”; or “improve this memo I’ve written”. Popular services include ChatGPT and Google Bard.

Text-to-code: There are various services aimed at helping users write code faster, or troubleshoot the code they’ve written. Options covering a wide range of programming languages include GitHub Copilot, Replit Ghostwriter, and ChatGPT (again!).

See the post from @aaronsim on X (formerly known as Twitter), for an extensive list of applications and providers: https://bit.ly/3Zlop6m
