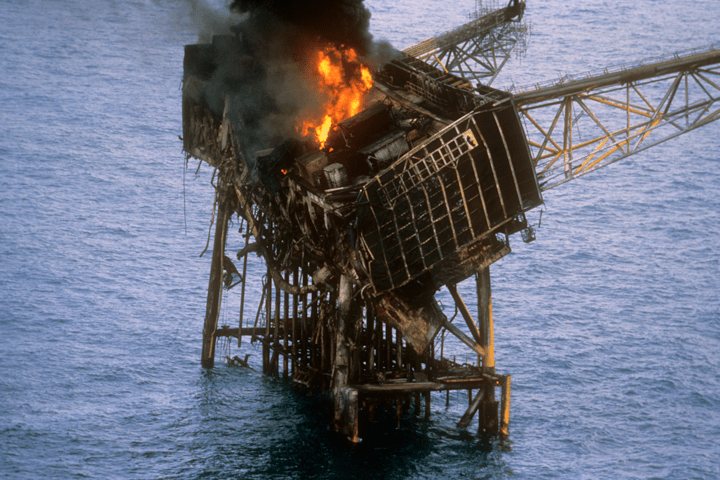

Lord Cullen: Piper Alpha Investigator

Lord Cullen of Whitekirk gave this speech at the opening of Oil & Gas UK’s Safety 30 conference in Aberdeen on 5 June. The conference marked the anniversary of the Piper Alpha disaster, which Lord Cullen investigated on behalf of the government. The 106 recommendations made in his landmark 1990 report reshaped offshore safety culture.

THERE is much to be learnt from the reasons for major accidents, by which I mean the underlying factors in particular. Those factors tend to recur, whatever the context, and so they remain highly relevant despite differences in conditions over the course of time.

I am going to speak about one aspect of the lessons about safety. When I read reports about major accidents I am struck by how frequently they have been preceded by signs warning of danger. But those signs were not recognised, or at any rate not effectively acted on, to prevent the accidents or limit their extent.

Signs of danger can take a variety of forms. For example, there may have been a previous accident or incident, a report pointing out danger, or signs of danger in the workplace or encountered in the course of work.

To illustrate this, I am going to talk about a number of accidents, both offshore and onshore, since they have much in common. They are certainly not an exhaustive list. I am not going to give a full analysis, but rather to use them to illustrate failures to heed the signs of danger, failures ranging from the boardroom to the workplace.

A previous accident or incident

I take first a previous accident or incident. Two points seem to me to be of fundamental importance.

First, it is essential to find out, not only what precipitated the event, but also the factors underlying it. It would be perilous to ignore the latter since they may give rise to a future accident or exacerbate its consequences. The board which investigated the Columbia space shuttle disaster in 2003 said:

“When causal chains are limited to technical flaws and individual failures, the ensuing responses aimed at preventing a similar event in the future are equally limited: they aim to fix the technical problem and replace or retrain the individual responsible. Such corrections lead to a misguided and potentially disastrous belief that the underlying problem has been solved.”[1]

Secondly, it is not much good having an investigation if it does not lead to a lasting improvement in safety, in other words, unless its results are embedded in the assessment and control of risk and reflected in the way in which work is tackled and done.

This brings me to Piper Alpha. On the night of the disaster in 1988 operatives tried to start up a spare pump for re-injecting condensate into an export oil line. Unknown to them a relief valve had been removed from the pump’s delivery pipe and in its place was a blind flange which was not leak tight. Condensate escaped and readily found a source of ignition. The explosion blew down the wall next to where crude oil was extracted, and there was a large oil fire. That was the start of the chain of events which led to the disaster.

Why did the operatives not know the position? That was due to a lack of communication of information at shift handover, and deficiencies in the permit to work procedure.

Now, nine months before the disaster a rigger had been killed. The accident had been due to the night shift improvising in the course of a lifting job without an additional permit to work, and to a lack of information from the day shift. A board of inquiry investigated the accident, but its report did not examine the adequacy or quality of the handover between maintenance lead hands. Some actions were taken, but they had no lasting effect on practice[2]. Management failed to recognise the shortcomings in the permit system and the handover practice. Whether by direction or inaction, they failed to use the circumstances of particular incidents to drive home the lessons of incidents to those who were immediately responsible for safety on a day-to-day basis[3]. There was no laid-down procedure for handovers and little, if any, monitoring of them. As for the permit to work system, it was, in my words, “being operated routinely in a casual and unsafe manner”.

I take another example from onshore. The disastrous fire at King’s Cross Underground Station in 1987 was triggered by a passenger dropping a lighted match through a wooden escalator. But a contributory factor was the failure of London Underground to carry through proposals which had followed earlier fires, such as improvements in the cleaning of the running tracks and the replacement of wood with metal. It appears that no one ‘owned’ the recommendations and accepted responsibility for seeing that they were properly considered at the appropriate level. The chairman of the investigation said of those earlier inquiries: “There was no incentive for those conducting them to pursue their findings or recommendations, or by others to translate them into action.” So these recommendations got lost. The directors of London Underground subscribed to the “received wisdom” that fires on the underground were “an occupational hazard”[4].

Reports about danger

Sometimes the warning signs are in reports about danger. I return to the Piper Alpha disaster. The initial explosion and fire were followed within an hour and a half by the rupture of a number of gas risers which had been routed through the platform. This led to the loss of normal means of evacuation, the destruction of the platform and huge loss of life. There were no subsea isolation valves.

The management could have been in no doubt as to the grave consequences to the platform and its personnel in the event of a prolonged high pressure gas fire. A report from consultants in 1986 had advised that such a fire would be almost impossible to fight and the gas pipelines would take hours to depressurise. Earlier in 1988 a report by the facilities engineering manager advised that if a fire was fed from a large hydrocarbon inventory, structural integrity could be lost within 10–15 minutes. A report in 1987 by a member of the loss prevention department pointed to an even greater hazard to personnel and plans for platform abandonment. In the event, these reports predicted what happened on the night of the disaster.

Management did not accept that this would happen. They had rejected the installation of subsea isolation valves and the fireproofing of structural members as impractical. They had relied on emergency shutdown valves and a limited deluge system, both of which were rendered useless by the initial explosion. They had not carried out a systematic identification and assessment of the potential hazards, or put in place adequate measures for controlling them, but had relied merely on a qualitative opinion. It showed, in my view, a dangerously superficial approach.[5]

Management should, of course, be alert to safety concerns voiced by members of the workforce, and indeed should be inquisitive about their experiences. There should be a reporting culture.

The roll-on/roll-off ferry Herald of Free Enterprise sank in 1987 soon after leaving Zeebrugge, with the loss of 186 passengers and crew. It sank because the large inner and outer doors for vehicles had been left open. After other ships in the fleet had sailed with their bow doors open, captains had asked for indicator lights to be fitted. The head office managers had ignored these requests and treated them with derision. The directors had failed to take action on an official recommendation that a person onshore should be designated to monitor the technical and safety aspects of the operations of the vessels, an attitude which the Court of Inquiry described as revealing “a staggering complacency”.[6] After the sinking, indicator lights were fitted in the remaining ships. That took just a few days.

Signs of danger in the workplace or in the course of work

I now turn to cases in which the signs of danger were in the workplace, or were encountered in the course of work, but were not recognised or acted on because of the attitudes taken towards them.

The explosion at the Texas City Oil Refinery in 2005 happened when vapour arising from an overflow from a raffinate splitter ignited. The investigation of this explosion revealed a large number of deficiencies in the management of process safety. Among the factors which BP found to be underlying what happened was a poor level of hazard awareness and understanding of process safety on the site, which led to people accepting levels of risk that were considerably higher than at comparable installations. One example of this was the placing of temporary trailers with personnel in close proximity to the hydrocarbon processing units. This had grown by custom and practice, but it exacerbated the effect of the explosion. There had been a number of fires on the site which were not investigated. The general reaction of the workforce to them appeared to be that there was nothing to worry about, as they were a fact of life.[7]

At the oil storage depot at Buncefield in England later in the same year (2005), a sticking gauge and an inoperable high level switch led to an overflow of petrol, the ignition of a vapour cloud and a massive explosion and fire. There had been a lack of adequate response to the fact that the gauge had been sticking, and a lack of understanding about the switch. Various pressures had created a culture where keeping the process operating was the primary focus and process safety did not get the attention, resources or priority that it required. The official investigation of the incident found that there were clear signs that the equipment was not fit for purpose but that no one questioned why, or what should be done about it other than ensure a series of temporary fixes[8].

Then there are cases where what are in fact signs of danger have been treated as just “normal”. People may become so accustomed to things happening that they do not recognise them as dangerous.

This brings me back to the Columbia space shuttle disaster (2003), which happened during re-entry into the Earth’s atmosphere. During take-off a piece of insulation foam had broken off and struck the orbiter, causing a loss of thermal protection. The investigating board found that over the course of 22 years, foam strikes had been, in their words, “normalised to the point where they were simply a ‘maintenance’ issue – a concern that did not threaten a mission’s success”. Even after it was clear from the launch videos that foam had struck the orbiter in a manner never before seen, the space shuttle programme managers were not unduly alarmed. They could not imagine why anyone would want a photo of something that could be fixed after landing. The board remarked that learned attitudes about foam strikes had diminished wariness of their danger. The managers were, in their words, “convinced, without study, that nothing could be done about such an emergency. The intellectual curiosity and scepticism that a solid safety culture requires was almost entirely absent.”[9]

The leak of dissolver product liquor at Thorp, Sellafield, which began in 2004, originated in design changes. However, investigation of the accident showed that an underlying explanation was the culture within the plant, which condoned the ignoring of alarms, non-compliance with some key operating instructions, and safety-related equipment not being kept in effective working order for some time, so that this had become the norm. In addition, there appeared to be an absence of a questioning attitude. For example, even where the evidence from the accountancy data indicated something untoward, the possibility of a leak did not appear to be a credible explanation until the evidence was incontrovertible[10].

These cases show that the management of safety requires vigilance about attitudes to signs of danger. Here is another example of the way in which human attitudes to signs of danger can affect safety. In various fields of activity people have to be trained in the skill of correctly interpreting what they see: take, for example, a doctor, a scientist or an archaeologist. That skill includes guarding against treating assumption as fact. People who are responsible for working safely may be tempted to prefer a harmless explanation for what are in fact signs of danger.

This brings me to the disastrous blowout on Deepwater Horizon at the Macondo well in 2010. Much has been written about it and I do not propose to add another volume to the library, but to refer to the stage at which drillers tested the integrity of the well.

Prior to this, the engineers who had undertaken the plugging of the well with cement declared it a success because they had “full returns”. The plug might have failed for various reasons[11], but they assumed that the integrity test would show whether the well was sealed, and so they did not carry out an evaluation of the cement job.

To carry out the integrity test the drillers had to displace the heavy drilling fluid with a column of seawater in the drill pipe to reduce the pressure inside the well to below the pressure outside it. Then they were to bleed off fluid at the rig, close the drill pipe when the pressure fell to zero, and monitor the well to determine whether any hydrocarbons were leaking into it. But they had repeated difficulty with bleeding off and reducing the pressure. After the third attempt, the drill pipe pressure rose to 1,400 psi, a clear indication that the integrity test had failed[12]. But they dismissed it. In the words of Chief Counsel to the National Commission into the disaster: “It appears they began with the assumption that the cement job had been successful and kept running tests and proposing explanations until they convinced themselves that their assumption was correct.”[13] Thus they normalised signs of danger. They did not realise the vital importance of the integrity test, but regarded it as just a routine activity to confirm the success of the cement job. So things could move ahead, and one of the most important defences against a blowout failed.

Concluding comments

If I now draw these examples together, what are they telling us?

In some cases there was an investigation, but it was limited in scope or superficial, or its results were not driven home to forestall further trouble. In others there was no investigation of any kind: the signs did not give rise to concern, or even curiosity. Some of the signs were treated as commonplace or misread as innocuous. So there was no corrective or protective action.

What underlay these attitudes to safety? Well, you can readily judge for yourselves. But, for my part, I see at least three factors:

- poor safety awareness, a prerequisite of which is competence for the purpose;

- failure to give priority to safety; and

- failure to show, or instil in others, responsibility for identifying and resolving safety issues.

Lest you think that in any of these cases there was no more than human error, I must say that the attitudes, practices and values of members of a workforce are often shaped by the tone set by management. But that is another chapter in the book of lessons!

I wish success for this conference. There is much to be learnt from the experiences of others.

The Commission which investigated the accident at Three Mile Island coined the phrase “an absorbing concern with safety”[14]. So should it be with us.

We have added fresh perspectives each day in the run-up to the 30th anniversary of the Piper Alpha tragedy.

References

1. Columbia Accident Investigation Board Report (2003), volume 1, page 177

2. Piper Alpha Report, paras 11.5–11.16

3. Ibid., para 14.34

4. Sir Desmond Fennell: Investigation into the King’s Cross Underground Fire, pages 31, 117, 118

5. Piper Alpha Report, paras 14.19–14.24

6. MV Herald of Free Enterprise: Report of Court No 8074, paras 14.1, 14.2, 16.1

7. Lessons from Texas City: a Case History, Michael P Broadribb, Director, Process Safety, BP International

8. Competent Authority Management Group: Buncefield: Why did it happen? Executive summary

9. Columbia Accident Investigation Board Report (2003), volume 1, page 181

10. Report of the investigation into the leak of dissolver product liquor at the Thermal Oxide Reprocessing Plant (THORP), Sellafield, HSE (2005), paras 127–128

11. Macondo: The Gulf Oil Disaster. National Commission on the BP Deepwater Horizon Oil Spill and Offshore Drilling, Chief Counsel’s Report (2011), page 90

12. Ibid., pages 146–148

13. Ibid., page 161

14. The President’s Commission on the Accident at Three Mile Island (the Kemeny Commission), 1979, Overview, page 8
