Conor Crowley: Process Safety Consultant

People often say they remember where they were when they heard President Kennedy was shot, when Princess Diana died, or when the two planes hit the twin towers on 9/11. For me, the Piper Alpha disaster was not one of those moments.

I had just finished my first year in college, 30 years ago, when the disaster happened. I don’t recall too much about it. Looking back at the Irish Times, I saw that it was front-page news for two or three days, until terrorists took over a liner in the Aegean Sea, and the news agenda moved on.

It wasn’t until November 1990, when I attended a conference in The Hague, organised to celebrate 100 years of chemical engineering at Delft University, that I really got my first introduction to Piper Alpha. One of the survivors, Ed Punchard, told the story of the accident, showing pictures of the development of the fires and explosions, and where he would have been in each of the photos. I don’t recall much of the detail of the actual presentation, other than the sheer scale of the incident compared to the people onboard, the measured anger in the speaker that such an event was allowed to happen, and his conviction that talking about it would be a good way to make sure it didn’t happen again.

In March 1991, I found myself in Aberdeen for a safety engineer job interview with Marathon Oil, and joined the company in September, on the day it began its safety case project. The next two years were a whirlwind of getting approaches together, starting risk assessment and quantitative risk analysis in an industry where they had not been widely applied before, and systematically auditing key safety systems (which would in turn become the “safety critical elements” that we could write performance standards for). We also carried out a full hazard and operability (HAZOP) study of two platforms, where I alternated as safety engineering representative and scribe, all the while supporting the ongoing operations of two complex facilities, Brae Alpha and Brae Bravo.

As a young engineer it was an amazing opportunity. I was given as much support as I needed, but encouraged to get after and do as much as I could to make the assessments work. We did the vast majority of the work in-house, as a small, tightly-knit team. We assessed, checked and explained the risks we were facing and did a major part of the work in getting our first safety cases accepted. In all of this, I was helped by a range of mentors and experts who could take a company on the journey from safety case sceptics to safety case acceptance in two short years.

Community scars

Those who were in Aberdeen when Piper happened told us the stories of what it was like, and they often described the night of 6 July in terms of the noise of the helicopters flying in and out of Aberdeen Royal Infirmary, through the night and beyond. Theirs was a level of shock that I think could only be appreciated if you had been there. But the memories, and the scar Piper left on the community, both locally and across the wider oil industry, are visible to this day.

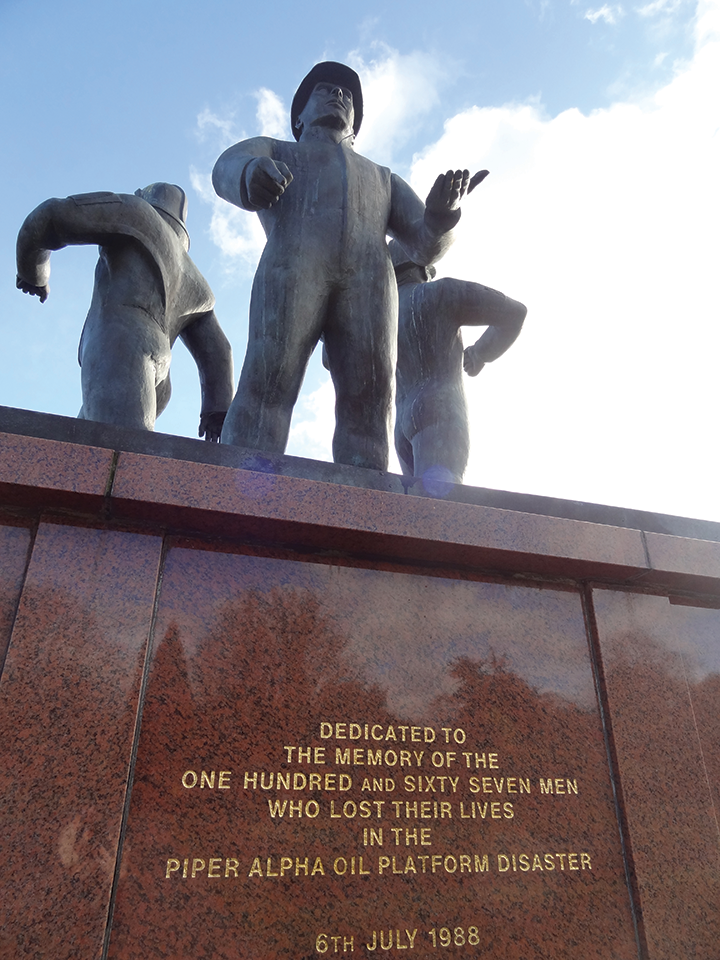

To my knowledge I’ve not personally met a survivor of Piper Alpha. However, on Brae Bravo, I met and worked with the brother of one of the victims, a fire and gas technician. He didn’t make any big fuss about it, but would always show up at every drill with his full kit and grab bag, giving us an example of how to take these things seriously. He had been on Claymore, and watched Piper go up in flames, all the while they were still pumping oil into the inferno. How that must have felt, and how he could get back onto a helicopter to work offshore, I will never know. What I do know is that when we visit the memorial in Aberdeen’s Hazlehead Park, his brother is among the names of those whose bodies were never recovered.

The case for safety

Once the safety cases were delivered, the job didn’t stop. We had an incident where we didn’t control the inhibits on the control system well, leading to a “day of the black rain”. For me, that led to a six-month tour offshore, helping the operators risk assess each override, understand what might be the cause, and develop the additional risk reduction measures that would be required. It gave me a valuable insight into the normal operation of the plants, the day-to-day hazards being faced, and the difference between what we might assume an operator could do, and what could actually be done.

Projects also took up a big part of our time. Subsea tie-backs were in their infancy, and I was tasked with being the technical representative for Marathon on the first ever subsea high integrity protection system (HIPS) on the Shell Kingfisher project, which was developed over Brae ‘B’. This involved us using the kinds of thought processes which are now embedded in standards on instrumented protective systems such as safety integrity levels and layers of protection analysis, to come up with a reliable solution in a remote and difficult location. Helping our American colleagues on a design by assessing the fire and explosion risk for a facility designed to be ice-bound for much of the year gave me an opportunity to see how our approaches would play out in different regions, and fundamentally influenced the design approach taken.

After five years with Marathon, I decided I wanted to do more process engineering, and after a short interval with a detailed design contractor, I joined an Aberdeen process engineering consultancy (which is now part of Atkins/SNC Lavalin), where I’ve now had more than 20 years of service. This has been an interesting experience, working all over the world while based in Aberdeen, and helping clients, especially where the answers were not straightforward, where the risks were not easy to remove, and where the drive to deliver may not always have allowed enough time for mature reflection on every issue. From a risk point of view, I’ve ended up navigating what a former colleague calls “the land of the grey area”.

Talking the talk

When I talk to our graduates these days about Piper, it’s a little bit like talking about the Battle of the Somme: it’s hard to believe that things were really like that back in the day, and with the benefit of hindsight, it might be easy to conclude that the way the facility was run was just a disaster waiting to happen. But at Marathon, we had to invest a large amount of cash in blast walls on our facilities because, as on Piper Alpha, we also had compression modules directly beside our accommodation block. Our enclosed modules had started with the design intent of providing enough vent area to reduce any explosion overpressure sufficiently, but that intent was not delivered in practice. We might have told ourselves that we had robust practices in place to control our risk, but whether that was in fact true was something we worked on improving, as improvements were there to be made.

Looking back, I am always aware that while we didn’t know everything leaving college, we had been well groomed in a way of tackling problems, and with the support of genuine, thoughtful experts, both in our company and across our industry, we achieved a huge amount in moving the industry to a new place in safety.


Where are we now?

But where are we now? Thirty years on, we haven’t suffered a similar disaster in the UK, but other accidents continue to happen around the world. And while modern design approaches should arguably allow us to avoid the known mistakes of the past, they don’t make us immune to the mistakes of the present or the future. Those mentors and thought leaders who shaped our industry have retired, and sadly many are no longer with us: the baton has been passed on to us. I know we must always be conscious of the optimism bias that bad things will happen to other people, in other places, not to us or our colleagues, or our companies. And our plants remain complex, with many ways to surprise us and catch us out: the onward march of technologies makes this more true each year.

And there are plenty of warnings out there. When Chris Flint, the HSE’s director of energy division, has taken the step of writing to all operators about his concerns over the number of large releases in the industry, and history has shown that major incidents in oil and gas tend to lag around two years behind the low point on the oil-price curve (see the Marsh Report, Figure 21), there is simply no room for complacency. I listened the other day to Drew Rae’s “DisasterCast” podcast on Piper Alpha, and he points out that back then the people in the industry thought they were taking safety seriously, and never expected a Piper Alpha disaster to happen. I’m sure today we also think we are taking things seriously, but we can’t guarantee we are not also wrong.

But I’m not pessimistic about the future of the industry. With increases in computer power, and falling software costs, we are now at the stage where more advanced computational fluid dynamics (CFD) tools are effectively the same price as simpler, cruder consequence models. The simplifications we once needed to make to allow problems to be computable are no longer required. The ability to run many more cases, and generate genuine understanding of the performance of our complex facilities, backed up by the actual data of how they perform in real life, is a rich seam of knowledge we need to tap into. Dame Judith Hackitt has often pointed out that there are no new accidents, just old accidents happening to new people. Where we have gaps in our understanding of how accidents could happen, we should be closely watching and following what other industries are doing: for instance, systems safety has matured significantly in the aviation industry, but is not as well advanced elsewhere. And chemical engineers, with their fundamental ability to understand how fluids interact, how reactions happen, how energy transfers and how breaking things down into unit operations allows complicated systems to be assessed more easily, are well placed to help with this.

Piper Alpha was a truly horrific accident, a disaster in every sense of the word. But it was a complex event, with many interlinked causes and issues, and we over-simplify at our peril. I’ve heard people argue that it was the incident where the survivors disobeyed their procedures, as if this is proof that going against procedures is OK. To me, it was those lucky enough to have been outside the accommodation, and with the situational awareness that the accommodation was not a safe option, that survived as a result.

The offshore oil and gas business is an industry where many of us engineers can’t easily walk onto the plant and see what’s going on, and are in fact often removed in time and distance from the risks we are influencing. And while the incident happened offshore, many of the failings that led to it happened onshore: design failings, operational failings and complacency. If we ask ourselves whether we are immune to these nowadays, what would our answer be?

Reference

1. The Marsh Report, “The 100 Largest Losses, 1978–2017, Large Hydrocarbon Losses in the Hydrocarbon Industries”, 25th Edition, March 2018.

We have added fresh perspectives each day in the run-up to the 30th anniversary of the Piper Alpha tragedy.
