A Tool for Learning: Classroom Use-cases for Generative AI

Christopher Honig, Shannon Rios and Eduardo Oliveira discuss three ways that educators can apply generative AI to their teaching methods

In recent months the world has witnessed the inexorable rise of an exciting new technology: generative AI. This has been most clearly embodied by the explosive growth of ChatGPT (developed by OpenAI), which grew to 100m registered users within two months of its launch (the fastest-growing user base of any software in history). ChatGPT and other generative AI tools promise to revolutionise the way we learn, communicate, and work.

In education, the discussion has been dominated by AI’s threat to academic integrity and its potential for misuse. However, we think a much more interesting discussion is its potential to improve teaching and learning, and to better prepare students for the AI-driven future. In this article we share three uses of generative AI that educators could adopt to help students generate ideas, review their own engineering coursework, and understand the limitations of AI. But first, let’s take a closer look at generative AI and what it is capable of.

Generative AI refers to a subset of artificial intelligence models that can generate data, such as text, images, or even music, when given a prompt. These models are trained on vast datasets to identify patterns and relationships within the data, enabling them to generate a contextually relevant and unique output based on the input prompt. In general, the more data these models are exposed to, the better they become at generating accurate and nuanced responses, effectively simulating human-like creativity and problem-solving abilities. As a result, generative AI is transforming the way we create content, develop ideas, and interact with digital environments, opening up possibilities across various domains, including education, art, communication, and engineering.


What’s in a prompt?

A prompt is the primary means of interacting with generative AI models, and understanding the basics of prompt writing is essential to getting valuable and usable outputs from them. A prompt, typically a text statement, guides the AI in generating a desired output. At the moment, crafting clear and specific prompts (often called prompt engineering) is crucial for accurate and relevant results, especially when seeking complex or multi-faceted outputs. As generative AI continues to evolve, we can expect this process to become more seamless and intuitive for users.

Generative AI as an ideation assistant

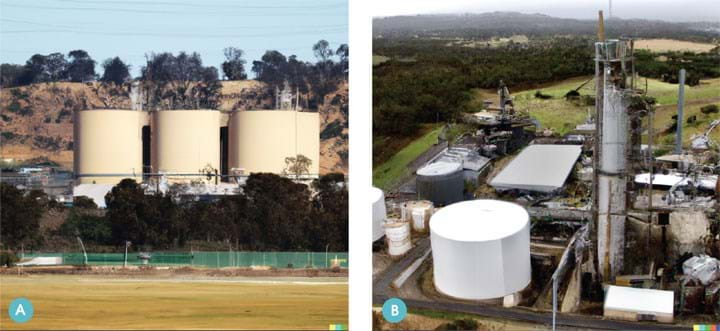

Ideation can be an important stage early in a design process. However, students rarely engage with it authentically and often fall into one of many pitfalls. These pitfalls include fixating on the first idea, having a preconceived notion of what a good solution should look like, and being reluctant to share ideas due to anxiety, embarrassment, or strong ownership of ideas. The ability of ChatGPT and other generative AI tools to create highly specified new content quickly makes them useful for ideation, both for generating alternative ideas and for avoiding some of these pitfalls. This can be applied to conceptual work, marketing, strategy development, and many other use-cases. A chemical engineering example might be to generate a conceptual image of the visual impact of a processing plant on the surrounding landscape, quickly and cheaply, for early-stage stakeholder engagement (Figure 1).

One classroom use-case is to mirror this emerging practice and ask students to start their design project with a generative AI-powered ideation stage. In ideation, students table a range of possible solutions to an engineering problem and heuristically narrow them down to a few options that may progress to more costly quantitative review (eg feasibility study, process design review). Here, AI would help power the ideation activity with the following steps:

- students use AI to generate a list of possible solutions;

- students learn how to use ChatGPT in their context;

- students generate five possible solutions to the engineering problem using ChatGPT; and

- students discuss the generated ideas in a group. Then each group presents their perceived “best” idea to the rest of the class.
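As an illustration of the third step, the sketch below assembles a specific ideation prompt of the kind a student might paste into ChatGPT. It is a minimal example under stated assumptions: the function name `build_ideation_prompt`, the wording of the prompt, and the brewery problem are all hypothetical and are not taken from the authors’ classroom materials.

```python
def build_ideation_prompt(problem: str, n_ideas: int = 5,
                          constraints: tuple[str, ...] = ()) -> str:
    """Assemble a specific ideation prompt for a text-based generative AI tool.

    Hypothetical helper for illustration only; the prompt wording is an assumption.
    """
    lines = [
        "You are assisting a student engineering design team with early-stage ideation.",
        f"Problem: {problem}",
        f"Propose {n_ideas} distinct conceptual solutions.",
        "For each one, give a one-sentence description, one advantage and one risk.",
    ]
    # Only mention constraints when the students have supplied some.
    if constraints:
        lines.append("Constraints to respect:")
        lines.extend(f"- {c}" for c in constraints)
    return "\n".join(lines)


if __name__ == "__main__":
    # Example problem statement (invented for this sketch).
    print(build_ideation_prompt(
        "Reduce fresh water consumption at a mid-sized brewery",
        constraints=("no new discharge permits", "payback under five years"),
    ))
```

Stating the number of ideas and the expected format of each one is one way to make the prompt clear and specific, so that the group can compare the responses directly.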

This process could be easily adapted to use AI image generators instead of ChatGPT if necessary. The purpose of this activity is three-fold:

- It exposes students to the potential of generative AI in their work.

- Students develop their ideation skills.

- By incorporating generative AI into the process this way, many of the ideation challenges students face can be minimised.

The activity could be adapted to focus on critical analysis of AI-generated content, or on how to give and receive feedback on conceptual work. It could also be varied in several ways, for example by having the AI generate all of the ideas, or by having the AI critique ideas generated by students.

There are, however, a range of ethical issues that arise in the use of generative AI, and educators can help frame these for students. In a chemical engineering context, these issues could include:

Bias

Generative AI models mirror the perspectives of the data used to train them. The ideation activity can work well for objective, fact-based content (eg possible environmental implications of a project) but can be limited in more subjective, socially specific contexts (eg the ethics of tackling the environmental implications).

Ownership

As generative AI tools are trained on large data sets, ownership of the output is complex and part of an ongoing debate, particularly in copyrighted and commercial contexts.

Accuracy

Large language models for generative AI attempt to sequence words in the most appropriate way, but don’t always return accurate results (they don’t have an underlying physical scientific model). Future engineering might place more emphasis on reviewing content, rather than generating new content.

Abuse

Generative AI could be misused to generate fake news or “deepfakes” (false videos or images of public identities) as well as to short-circuit student learning outcomes through academic misconduct.

ChatGPT-assisted code review

Peer code review is a useful practice wherever coding is employed in open-source and industrial contexts. It involves manual inspection of source code by programmers other than the original author. Although research shows that code review improves final product quality [3,4], the process is not always welcomed by engineers as it can be time consuming. In academia there are several additional challenges: (i) not all students feel comfortable sharing (or exposing) their source code with reviewers; (ii) not all students believe they’re experienced or knowledgeable enough to evaluate source code written by others; and (iii) students don’t see much value in adopting code review processes in academic environments.

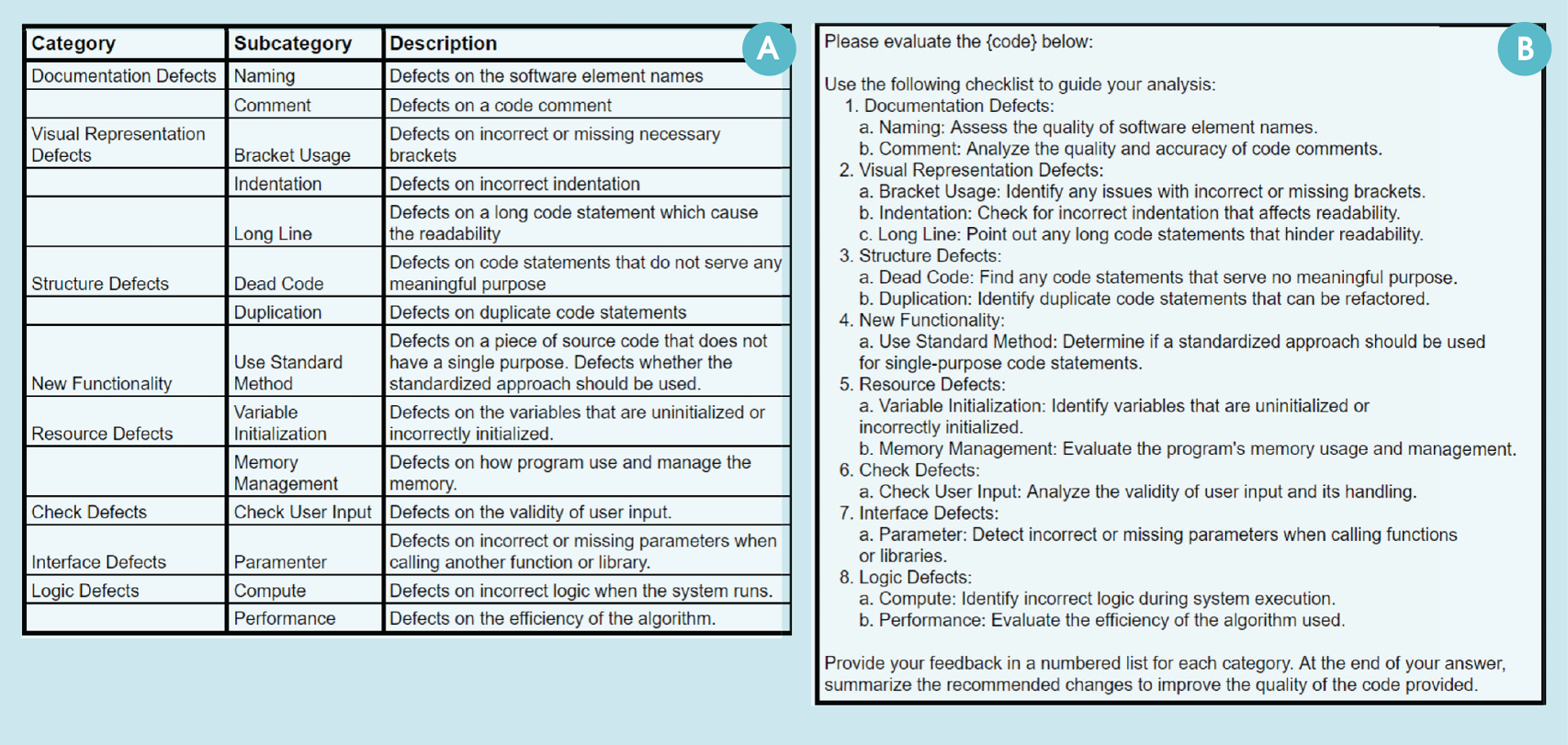

In this context, we designed an asynchronous, ChatGPT-assisted code review process for software engineering students at The University of Melbourne. The process is automated using the ChatGPT API with a detailed prompt (Figure 2b) and is integrated into the students’ existing workflow. This lets us teach our engineering students how to reflect on and evaluate their code against a research-informed code review checklist (Figure 2a).
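To give a feel for how a checklist and a piece of student code might be combined into a single request, here is a minimal sketch. It only builds the chat-style list of `role`/`content` messages that chat completion APIs accept and makes no network call. The function name, the system instructions and the checklist items are hypothetical placeholders; they are not the prompt or checklist shown in Figure 2.

```python
def build_review_messages(source_code: str,
                          checklist: list[str]) -> list[dict[str, str]]:
    """Return chat-style messages asking a model to review code against a checklist.

    Illustrative only: the instruction wording is an assumption, not the authors' prompt.
    """
    # Number the checklist so the model can refer to items unambiguously.
    numbered = "\n".join(f"{i}. {item}" for i, item in enumerate(checklist, start=1))
    system = (
        "You are a code reviewer for student software engineering projects. "
        "Assess the submitted code against each checklist item in turn. "
        "For every item, answer 'met', 'not met' or 'unclear' and justify briefly."
    )
    user = f"Checklist:\n{numbered}\n\nCode to review:\n{source_code}"
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": user},
    ]


# Hypothetical checklist items, invented for this sketch.
EXAMPLE_CHECKLIST = [
    "Are variable and function names descriptive?",
    "Is duplicated logic factored into reusable functions?",
    "Are error conditions handled explicitly?",
]

if __name__ == "__main__":
    snippet = "def add(a, b):\n    return a + b"
    for message in build_review_messages(snippet, EXAMPLE_CHECKLIST):
        print(f"[{message['role']}]\n{message['content']}\n")
```

Keeping the checklist separate from the instructions means the same review prompt can be reused across assignments by swapping in a different list.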

This new code review approach may play a key role in improving the design quality and maintainability of software, in minimising the challenges of adopting code review in academic environments, and in promoting AI literacy among engineering students. Here, AI literacy is understood as “a set of competencies that enables individuals to critically evaluate AI technologies, communicate and collaborate effectively with AI, and use AI as a tool online, at home, and in the workplace” [2]. Students were made aware that they should not use ChatGPT to review confidential code, nor share private information in their prompts (code with personal comments or data from real users). Students were also encouraged to critically review the feedback they received from ChatGPT. Initial student feedback has been positive, but a more comprehensive analysis of the learning outcomes is part of an ongoing study.


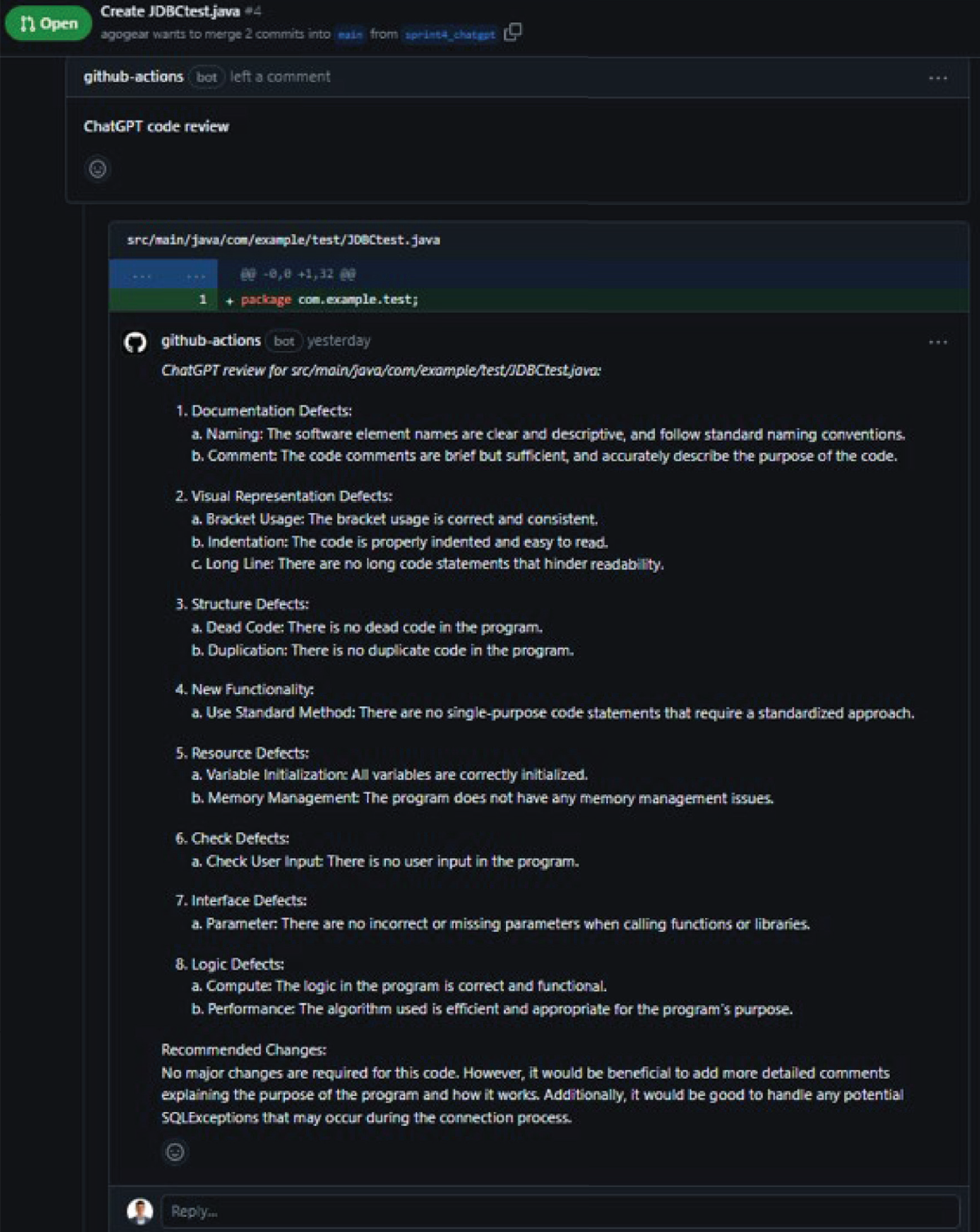

In our initial trial, results from ChatGPT are displayed inside a revision management system (GitHub). This leaves unchanged the development process already adopted by most engineering students. One major advantage is that a student doesn’t need to sign up for, or learn how to use, ChatGPT before they can get useful feedback on their work.

We are currently collecting data and evaluating the impact of ChatGPT on students’ learning of code quality and review. Findings will be disseminated later this year; in the meantime, an example of the output from ChatGPT can be seen in Figure 3.

Although this use-case has focused on code review, the process could easily be adapted to many other review processes, particularly where there is a well-defined rubric or standard to measure against. One example in a chemical engineering context would be to check process design specifications for compliance with standards or government legislation (eg EPA regulations). It is conceivable for an AI-powered reviewer to become a normal cross-check in a sign-off chain for engineering drawings or design calculations, subject to appraisal by a human engineer, much like a spelling and grammar checker for design work.

Adapted Socratic method

The Socratic method of inquiry in education is a form of cooperative debate between individuals, predicated on asking and answering questions to stimulate critical thinking. In this adapted use-case, the generative AI becomes a participant in the discussion alongside a small group of students (optimally three). The students are directed to interrogate the AI on a specific topic (for example the concept of entropy) until they can identify an error in its responses, which they then highlight and discuss with an instructor (tutor or lecturer). Rather than finding answers, this activity frames the AI as an unreliable participant, and students are primed to search for errors (to proofread, rather than blindly generate content).

The activity develops two learning outcomes:

- Comprehension of content.

In order to be able to identify false information, students need a base level of understanding of the core material. The process of interrogating ChatGPT gives a focal point for a discussion of the theoretical content among the students. The discussion between the students, as they make sense of their shared understanding, is the key point of learning. It is based on a constructivist approach to teaching: the idea that people do not acquire knowledge by passively receiving it, but rather construct understanding through experiences and social discourse (in this case, discussion with other students to create meaning).

- AI proficiency.

The activity also trains students in the use and limitations of the AI tool. By deliberately using the tool until it breaks, students gain a better understanding of its appropriate use-cases. Focusing on the tool’s errors also emphasises that it is not an omniscient oracle. This process encourages students to think critically and appraise the output from the AI.

Conclusion

The landscape is shifting quickly, and generative AI holds great potential for revolutionising the way we learn, communicate, and work in various domains, including education. Through the use-cases outlined in this article, we have shown how generative AI models like ChatGPT can assist students in ideation, code review, critical analysis, and in developing an understanding of theoretical content. There are already examples of AI-powered education tools: Khan Academy and Duolingo have both recently announced AI-powered online tutors. As the field of AI continues to evolve we can expect more innovative applications, paving the way for more seamless and intuitive interactions between humans and AI.

Generative AI tools are already supplementing lower-order teaching work, like marking and classroom content creation. Generative AI can also analyse large data sets to identify where students need additional support. For students, AI tutors can provide encouraging, individual and adaptive support. Generative AI can also improve access to education, for example with text-to-speech software (for visually impaired students) and, ultimately, high-fidelity real-time translation for cross-linguistic instruction. Students are already making the switch: online tutoring service Chegg saw its share price drop by almost 50% on 1 May 2023, after an earnings call in which the company admitted it was losing customers to ChatGPT. So the future of education with generative AI is disruptive and transformative, but also promising and exciting, and we are only at the beginning of what is possible.

This is the second in a series of articles where colleagues will explore various aspects of digitalisation of engineering education in more detail. To read more articles in this series, as it develops, visit: https://www.thechemicalengineer.com/tags/digitalisation-of-engineering-education-series/

References

1. Chong, C.Y., et al. Assessing the students’ understanding and their mistakes in code review checklists: an experience report of 1,791 code review checklist questions from 394 students. 2021 IEEE/ACM 43rd International Conference on Software Engineering: Software Engineering Education and Training (ICSE-SEET) 2021; 20–9.

2. Long, D., et al. What is AI literacy? Competencies and design considerations. Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems 2020; 1–16.

3. Shull, F., et al. Inspecting the history of inspections: An example of evidence-based technology diffusion. IEEE Software 2008; 25(1):88–90.

4. Wilkerson, J.W., et al. Comparing the defect reduction benefits of code inspection and test-driven development. IEEE Transactions on Software Engineering 2011; 38(3):547–60.
