Next-generation Predictive Tools for Multiphase Systems

Omar Matar explains how researchers are combining machine learning and physics-driven approaches in multiphase systems to develop open-source toolkits for the wider community

Multiphase systems are central to almost every facet of manufacturing, energy, and healthcare, which are of critical importance for a healthy, prosperous, and resilient planet. Understanding and controlling multiphase flow phenomena are the challenges identified in the PREMIERE (PREdictive Modelling with QuantIfication of UncERtainty for MultiphasE Systems) project (2019–present), a £6.56m (US$8.2m) EPSRC-funded, four-university programme1 supported by a number of global industries and healthcare partners.

Major uncertainties in manufacturing and energy were all anticipated when the PREMIERE programme was conceived back in 2016. These uncertainties stem from volatility in demand, currency, and energy prices, as well as disruptions in supply chains; Covid-19, for instance, has linked acute vulnerabilities in the UK healthcare system to large-scale supply disruptions. All of these challenges have a profound effect on companies’ emissions, level of investment, resilience, and, ultimately, survival, with clear implications for the global economy and prosperity. They can only be overcome by developing an agile and highly networked base that relies on data, analytics, prediction, and research and development (R&D) to drive innovation and efficiencies.

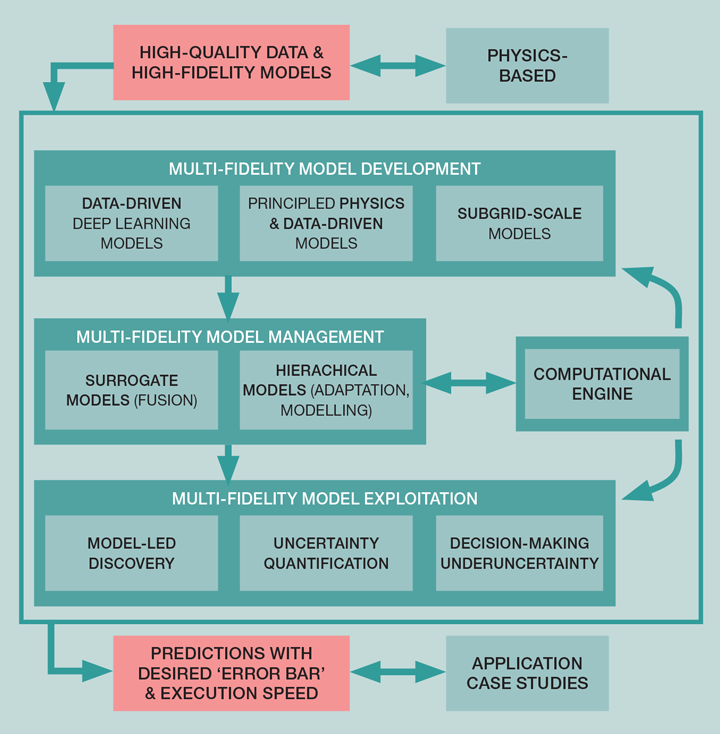

PREMIERE brings together a multi-disciplinary team of researchers to create multi-sector impact through a next-generation predictive framework for complex multiphase systems (see Figure 1). The framework blends physics-driven computational fluid dynamics (CFD), data analytics, and machine learning approaches, informed by cutting-edge experiments. This leads to reliable ‘multi-fidelity’ models that furnish solutions with the desired ‘accuracy vs efficiency’ trade-off. The PREMIERE team’s multi-disciplinary expertise and long-standing industrial collaborations ensure that PREMIERE is leading a paradigm shift in multiphase flow research worldwide towards data-driven approaches. These approaches are both physics-informed, meaning that they draw on observations from physical experiments, and physics-constrained, meaning that any solution is physically realisable (for example, satisfying mass and energy conservation).
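
As a rough illustration of what ‘physics-constrained’ can mean in practice, the sketch below takes the raw output of a data-driven model and adjusts it so that mass is conserved. It is a minimal example under stated assumptions, not the PREMIERE method: the function name, the three-phase outflow values, and the use of a least-squares projection are all hypothetical choices made for illustration.

```python
import numpy as np


def project_onto_mass_balance(predicted_outflows, total_inflow):
    """Return the outflows closest (in the least-squares sense) to the
    prediction that sum exactly to total_inflow.

    This is the orthogonal projection onto the plane sum(x) = total_inflow.
    It does not enforce non-negativity, so a real model would need an
    additional check or a bounded solver.
    """
    predicted = np.asarray(predicted_outflows, dtype=float)
    residual = predicted.sum() - total_inflow  # mass imbalance of the raw prediction
    return predicted - residual / predicted.size  # spread the correction evenly


# Hypothetical raw model output: mass flow rate of each phase in kg/s.
raw = np.array([0.62, 0.25, 0.18])
corrected = project_onto_mass_balance(raw, total_inflow=1.0)
print(corrected, corrected.sum())
```

Projection is only one option. A softer alternative is to add the mass imbalance as a penalty term in the training loss, which encourages but does not guarantee conservation.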

Data-driven models are a product of the data upon which they are trained, and thus a good model requires high-quality data and a comprehensive training regime. However, due to various constraints associated with data collection and processing, particularly in the complex multiphase systems of ‘real-world’ applications, these data sets may be too big or too small, too unstructured, heterogeneous, noisy, or incomplete, limiting the ability to train an accurate data-driven model. Our researchers are currently working to overcome these issues and are also developing the PREMIERE Open-Source Software Toolkit (POSST), which will provide well-documented, user-friendly numerical routines and libraries. It will offer much-needed guidance to non-expert industrialists and academics on which machine/deep learning and CFD routines to use, depending on their needs. POSST is part of the general-purpose and data-agnostic DataLearning toolkit developed at Imperial College London, in work led by Rossella Arcucci, and will be made available on GitHub for industrial and academic partners, and the wider STEM community.

We have found it productive in PREMIERE to focus our efforts on a set of impactful case studies, chosen to reflect the multiphase challenges highlighted above that require multi-fidelity solutions. These case studies involve a varied combination of theory and experiments, from machine learning and simulations to microfluidics, large-scale pipe flows, and fluidised beds, used to address industrial, energy, and healthcare applications.

Machine learning for design of experiments

One case study involved overcoming the challenges of synthesising micro- and nanoparticles with tailored properties using microchannel reactors. To address this challenge, the PREMIERE researchers combined characterisation techniques (eg spectroscopy, microscopy, and light scattering) for measuring particle size distribution with design of experiments, machine learning, and physics-driven models in order to predict particle size in the final product(s). The inputs were process parameters and a choice of reactants.
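
To make the design-of-experiments step more concrete, the sketch below enumerates a full factorial design over a few process parameters and a choice of reactant. A full factorial design is one of the simplest schemes; the article does not say which scheme the PREMIERE team used, and the factor names and levels here are hypothetical placeholders rather than values from the study.

```python
from itertools import product

# Hypothetical factors and levels, chosen only for illustration.
factors = {
    "flow_rate_ml_min": [0.5, 1.0, 2.0],
    "temperature_c": [25, 60],
    "reducing_agent": ["agent_A", "agent_B"],
}


def full_factorial(factors):
    """Return one dict per experimental run, covering every combination of levels."""
    names = list(factors)
    return [dict(zip(names, levels)) for levels in product(*factors.values())]


design = full_factorial(factors)
print(len(design))  # number of runs: 3 * 2 * 2
for run in design[:3]:
    print(run)
```

Each run in `design` would be paired with a measured particle size, giving the input-output table on which a machine learning model could then be trained.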

Our researchers conducted experiments to simulate the size of silver nanoparticles2. The method they developed uses kinetic nucleation and growth constants, derived from a separate set of experiments, to account for the chemistry of synthesis, the impact of mixing within the device, and the storage temperature needed for particle stability after collection. The results demonstrate that well-designed experiments can successfully contribute to the creation of several efficient and reliable models for predicting the size distribution of micro- and nanoparticles2.
